Technology

The creators of a short video using Sora technology explain the strengths and limitations of AI-generated videos

OpenAI’s video generation tool Sora surprised the AI community in February with smooth, realistic video that appears to be well ahead of the competition. However, the carefully choreographed debut left out many details. Those details have since been filled in by a filmmaker who was given early access to create a short film using Sora.

Shy Kids is a Toronto-based digital production team that OpenAI picked as one of only a few to produce short films, essentially for OpenAI’s promotional purposes. The team was nonetheless given considerable creative freedom in making “air head.” In an interview with visual effects news outlet fxguide, post-production artist Patrick Cederberg described “actually using Sora” as part of his work.

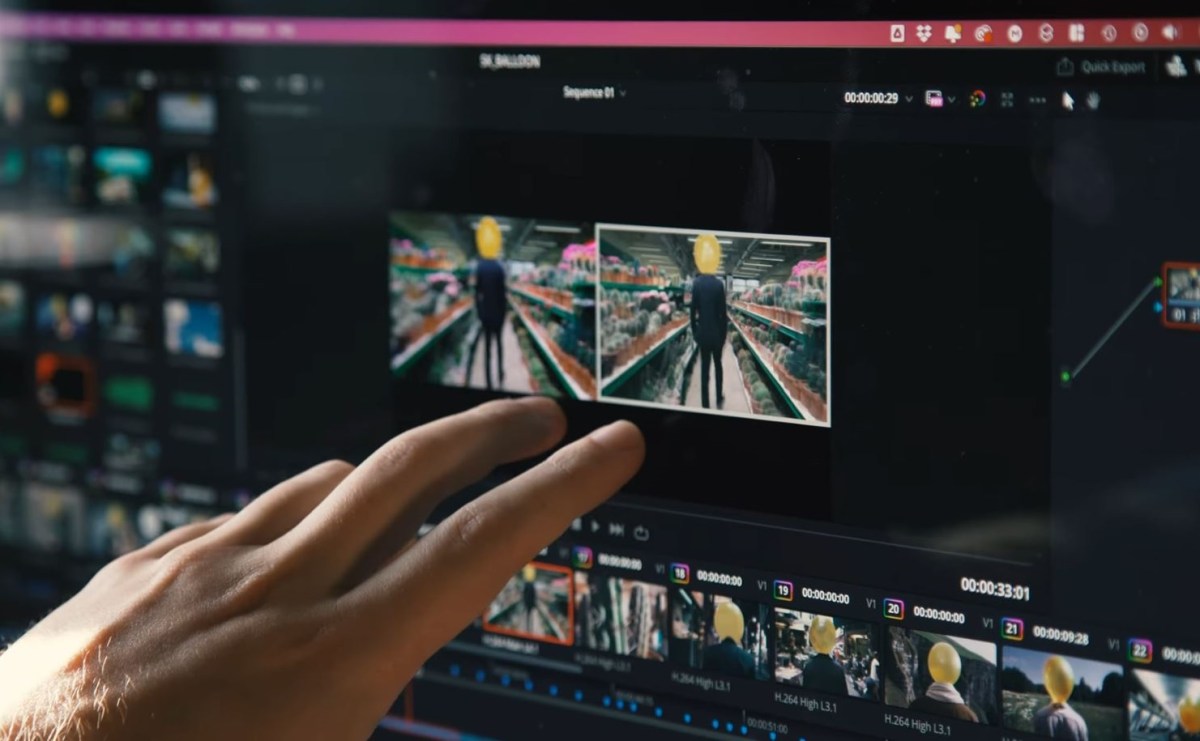

Perhaps the most significant takeaway for many is this: while OpenAI’s post highlighting the shorts lets the reader assume they emerged more or less fully formed from Sora, the reality is that they were professional productions, complete with solid storyboarding, editing, color correction, and post work such as rotoscoping and visual effects. Just as Apple says “Shot on iPhone” but doesn’t show the studio setup, professional lighting, and color work done after the fact, the Sora post only talks about what the tool lets people do, not how they actually did it.

The interview with Cederberg is interesting and fairly non-technical, so if you’re at all interested, head over to fxguide and read it. But here are some interesting facts about using Sora that suggest that, while impressive, the model is perhaps less of a leap forward than we thought.

Control is the most desired and most elusive thing at this point. … The best we could do was be hyper-descriptive in our prompts. Describing the character’s wardrobe, as well as the type of balloon, was our way of maintaining consistency, because from shot to shot / generation to generation there isn’t yet a feature set in place that offers full control over consistency.

In other words, things that are simple in traditional filmmaking, such as choosing the color of a character’s clothing, require elaborate workarounds and checks in a generative system, because each shot is created independently of the others. That could change, of course, but for now it is certainly much more labor-intensive.

Sora’s outputs also had to be watched for unwanted elements: Cederberg described how the model would routinely generate a face on the balloon that the main character has for a head, or a string hanging down the front. These had to be removed in post, another time-consuming process, whenever the team couldn’t get the prompt to exclude them.

Precise timing and precise movement of characters or the camera aren’t really possible: “There is a little bit of temporal control over where these different actions are happening in a given generation, but it’s not precise… it’s kind of a shot in the dark,” Cederberg said.

For example, timing a gesture such as a wave is a very approximate, suggestion-driven process, unlike manual animation. And a shot such as a pan upward along a character’s body may or may not reflect what the filmmaker wants, so in this case the team rendered the shot in portrait orientation and cropped it in post. The generated clips also often played in slow motion for no particular reason.

An example of a shot as generated by Sora and as it appears in the finished short. Image credits: Shy Kids

In fact, everyday filmmaking language such as “panning right” and “tracking shot” produced generally inconsistent results, Cederberg found, which the team found quite surprising.

“The researchers weren’t really thinking like filmmakers before they turned to artists to play with this tool,” he said.

As a result, the team ran hundreds of generations, each 10 to 20 seconds long, and ended up using only a handful. Cederberg estimated the ratio at 300:1, though of course we would probably all be surprised by the ratio on an ordinary shoot.

The team actually made a short behind-the-scenes video explaining some of the issues they ran into, if you’re curious. Like much AI-related content, it has drawn comments that are quite critical of the whole project, though not as harsh as those aimed at the AI-assisted ad we saw pilloried recently.

One last interesting caveat concerns copyright: if you ask Sora for a Star Wars clip, it will refuse. And if you try to get around this with “man in robes with a laser sword on a retrofuturistic spaceship,” it will also refuse, because by some mechanism it recognizes what you are trying to do. It also refused to produce an “Aronofsky-style shot” or a “Hitchcock zoom.”

On the one hand, this makes perfect sense. But it does raise the question: if Sora knows what these are, does that mean the model was trained on that content in order to better recognize that it is infringing? OpenAI keeps its training data cards close to the vest, to the point of absurdity (as in CTO Mira Murati’s interview with Joanna Stern), so it will almost certainly never tell us.

As for Sora and its use in filmmaking, it is clearly a powerful and useful tool in its place, but its place is not “creating films out of whole cloth.” Yet. As another villain once famously said, “that comes later.”

Technology

AI chip startup DEEPX secures $80 million Series C at $529 million valuation

DEEPX is a South Korean on-device AI chip startup that builds hardware and software for a range of AI applications in electronic devices. This week, the company announced that it had raised $80 million (KRW 108.5 billion) in a Series C round at a valuation of $529 million (KRW 723 billion), a more than eightfold increase compared with its Series B financing of roughly $15 million in 2021.

The Series C funding, which brings the company’s total raised to roughly $95 million, will support mass production of the startup’s first products (DX-V1, DX-V3, DX-M1 and DX-H1) in late 2024 for global distribution. The startup will also use the new capital to accelerate the development and launch of a new generation of on-device large language model (LLM) solutions.

DEEPX was founded in 2018 by CEO Lokwon Kim, who previously worked at Apple, Cisco Systems, IBM Thomas J. Watson Research Center and Broadcom.

The global edge AI (also called on-device AI) market is expected to reach $107.47 billion by 2029, up from $11.98 billion in 2021, according to a recent report. “The on-device AI market, excluding edge servers, requires implementing AI capabilities without going through servers or the cloud,” Kim told TechCrunch. “The on-device AI market is growing with applications such as computer vision, facial and voice recognition, smart mobility, robotics, IoT and physical security systems.”

Kim said that if mass production begins this year, potential customers such as end-product manufacturers could commercialize their products with DEEPX AI chips in 2025.

DEEPX, with about 65 employees, isn’t the only company developing AI chip solutions. The Korean company competes with Hailo, which raised a $120 million funding round last month; SiMa.ai, which closed $70 million, also in April; and Axelera, a Belgian AI chip startup that raised $27 million in 2022.

Kim said his company’s differentiators include cost efficiency, energy efficiency and All-in-4 AI, a comprehensive solution for various AI applications. The All-in-4 AI lineup comprises the DX-V1 and DX-V3, designed for vision systems in home appliances, surveillance cameras, robotic vision systems and drones, as well as the DX-M1 and DX-H1, designed for AI computing systems, AI servers, smart factories and AI accelerators. Kim said DEEPX currently has more than 259 patents pending in the U.S., China and South Korea.

“Nvidia’s GPGPU-based solutions are most cost-effective for services built on large language models such as ChatGPT, but the total power consumed by running GPUs has reached levels that exceed the electricity consumption of an entire country,” Kim said. “This collaboration between server-scale AI and large on-device AI models is expected to significantly reduce energy consumption and costs compared to relying solely on data centers.”

The startup has no customers yet, but is working with over 100 potential customers and strategic partners, such as Hyundai Kia Motors Robotics Lab and Korean IT company POSCO DX, to test the capabilities of DEEPX’s AI chips.

SkyLake Equity Partners, a South Korean technology private equity firm, led the latest round together with BNW Investments, a Korean private equity firm founded by the former CEO of Samsung LED and of Samsung Electronics’ memory chip unit. AJU IB and returning investor Timefolio Asset Management also participated in this round.

Technology

Google created some of the first social media apps on Android, including Twitter and others

Here’s a bit of startup history that might not be widely known outside of tech firms themselves: the first versions of popular Android apps like Twitter were created by Google itself. This revelation came from a recent podcast with Twitter’s former senior director of product management, Sara Beykpour, now co-founder of artificial intelligence startup Particle.

In the podcast hosted by Lightspeed partner Michael Mignano, Beykpour reflects on her role in Twitter’s history. She explains how she began working at Twitter in 2009, initially as a tools engineer, when the company had only about 75 people. Beykpour later moved to Twitter’s mobile apps, around the time that third-party apps were gaining popularity on platforms such as BlackBerry and iOS. One of them, Loren Brichter’s Tweetie, was even acquired by Twitter and became the basis of its first official iOS app.

As for the Twitter app for Android, Beykpour said it came from Google.

The Twitter client for Android is “a demo app that Google built and gave us,” she said on the podcast. “They did it with all the popular social media apps at the time: Foursquare… Twitter… they all looked the same at first because Google wrote them all.”

Mignano interjected: “Wait, back up; explain this. So Google wanted companies to adopt Android, so it built the apps for them?”

“Yes, exactly,” Beykpour replied.

Twitter then took over the Android app Google had built and continued to develop it. Beykpour said she was the company’s second Android engineer.

In fact, Google detailed its work on the Twitter client for Android in a 2010 blog post, but most news reports at the time didn’t credit Google for the app, which made it a forgotten piece of internet history. In its post, Google explains how it implemented Android best practices in the Twitter app. Beykpour told TechCrunch that the post’s author, Virgil Dobjanschi, was the lead software engineer.

“If we had questions, we were supposed to ask them,” she recalled.

Beykpour also shared other stories from Twitter’s early days. For example, she worked on Twitter’s Vine video app (after returning to Twitter from Secret) and was under pressure to launch Vine on Android before Instagram launched its video product. She met that deadline, launching Vine about two weeks before Instagram Video arrived, she said.

Instagram’s video launch “significantly” hurt Vine’s performance and, according to Beykpour, led to the popular app’s demise.

“That was the day the writing was on the wall,” she said, even though it took years for Vine to finally shut down.

At Twitter, Beykpour led the shutdown of Vine, an app still so beloved that even Twitter/X’s new owner, Elon Musk, keeps teasing about bringing it back. Beykpour, however, believes Twitter made the right decision in shutting down Vine, noting that the app wasn’t growing and was expensive to maintain. She acknowledges that others may see it differently, perhaps arguing that Vine didn’t get sufficient resources or management support. Ultimately, however, the shutdown came down to Vine’s impact on Twitter’s bottom line.

Beykpour also shared an interesting anecdote about working on Periscope. She joined the startup right after leaving Secret, just as it was being acquired by Twitter. She remembers having to officially rejoin Twitter under a fake name to keep the acquisition secret for a while.

Looking back on Twitter, she also mentioned the difficulty of obtaining resources to develop products and features for power users such as journalists.

“Twitter really had a hard time defining its user,” she said, since it “used a lot of traditional OKRs and metrics.” But the fact was that “only a fraction of people tweet” and “of the fraction of people who tweet, a subset of people are responsible for the content that everyone wants to see,” which, according to Beykpour, was difficult to measure.

Now at Particle, she is drawing on her experience building Twitter to shape the strategy for an AI-powered news app that aims to give people information about the topics that interest them and what’s happening around them.

“Particle is a reimagining of the way you consume daily news,” Beykpour says on the podcast. The app aims to offer a multi-perspective look at the news while providing access to high-quality journalism. The startup is looking for another way to make money from news besides advertising, subscriptions or micropayments. However, the details of how Particle will do that are still under discussion. The startup is currently in talks with potential publishers about how to compensate them for their work.

Technology

Google funds a guaranteed income program in San Francisco

The pilot program will help more than 200 families, many of which are led by single women of color.

Google is partnering with San Francisco-area nonprofits to provide financial support to families struggling with homelessness.

The It All Adds Up program will provide financial assistance to eligible families. To qualify, families must be nearing the end of rental assistance programs provided by local nonprofit agencies.

According to reports, 225 randomly chosen participants will receive monthly cash payments of $1,000 for a year, and another 225 families will receive $50. Participants can use the money however they choose.

“We hope this will provide a soft landing for families exiting our grant programs and help them maintain financial stability,” Hamilton Families CEO Kyriell Noon told the San Francisco Chronicle.

Currently, roughly 30 families participate in the program. Additional participants will be added every month until all 450 spots are filled. More than 70% of families currently in the programs are led by single mothers of color with children under five.

Compass Family Services and Hamilton Families will operate the pilot program in San Francisco, which will be funded by Google and J-PAL.

According to the program’s website, the initiative is built around a five-year study of the impact of a guaranteed basic income on families.

“Over the next five years, with the support of Google.org and J-PAL North America and in partnership with New York University, this pilot is on a mission to prove that housing stability is easier to achieve when families who have recently experienced homelessness have a little more room to breathe.”

NYU’s Housing Solutions Lab will study the program’s outcomes to determine how effective the payments are in helping families stay in long-term housing. Researchers will also assess the impact on participants’ health and financial outcomes.

Google’s participation in this program is part of its $1 billion pledge to combat the housing crisis in the San Francisco area.
