Technology

Fei-Fei Li chooses Google Cloud, where she led artificial intelligence, as the primary provider of computing solutions for World Labs

Cloud service providers are chasing AI unicorns, and the latest is Fei-Fei Li’s World Labs. The startup has just chosen Google Cloud as its primary compute provider for training AI models, a deal that could be worth hundreds of millions of dollars. But the company said Li’s tenure as Google Cloud’s chief AI scientist was not a factor.

At its Google Cloud Startup Summit on Tuesday, the company announced that World Labs will devote a large portion of its funding to licensing GPU servers on the Google Cloud Platform and, ultimately, to training “spatially intelligent” AI models.

A handful of well-funded startups building AI foundation models are in high demand in the cloud services world. The largest deals include OpenAI, which trains and runs its AI models exclusively on Microsoft Azure, and Anthropic, which uses AWS and Google Cloud. These companies often pay hundreds of thousands of dollars for computing services, and they could one day need much more as they scale their AI models. That makes them valuable customers for Google, Microsoft and AWS to build relationships with from the start.

World Labs is certainly building unique, multimodal AI models with significant compute needs. The startup just raised $230 million at a valuation of over $1 billion, in a deal led by A16Z, to build AI world models. Google Cloud’s general manager of startups and AI, James Lee, tells TechCrunch that World Labs’ AI models will one day be able to process, generate and interact with video and geospatial data. World Labs calls these AI models “spatial intelligence.”

Li has deep ties to Google Cloud, having led the company’s AI efforts in 2018. However, Google denies that the deal is a result of that relationship and rejects the idea that cloud services are merely a commodity. Instead, Lee said, the bigger factor was Google Cloud’s services, such as its high-performance toolkit for scaling AI workloads and its large supply of AI chips.

“Fei-Fei is obviously a friend of GCP,” Lee said in an interview. “GCP wasn’t the only option they were considering. But for all the reasons we talked about – our AI-optimized infrastructure and ability to meet their scalability needs – they ultimately came to us.”

Google Cloud offers AI startups a choice between its proprietary AI chips, tensor processing units (TPUs), and Nvidia GPUs, which Google buys and which are in more limited supply. Google Cloud is trying to persuade more startups to train AI models on TPUs, mainly to reduce its dependence on Nvidia. All cloud service providers are currently constrained by the shortage of Nvidia GPUs, so many are building their own AI chips to meet demand. Google Cloud says some startups train and run inference exclusively on TPUs, but GPUs remain the industry’s favorite chips for AI training.

As part of this agreement, World Labs has chosen to train its AI models on GPUs. However, Google Cloud did not say what prompted the decision.

“We had been working with Fei-Fei and her product team, and at this point in the product roadmap it made more sense for them to work with us on the GPU platform,” Lee said in an interview. “But that doesn’t necessarily mean it’s a permanent decision… Sometimes (startups) move to other platforms like TPU.”

Lee didn’t reveal how large World Labs’ GPU cluster is, but cloud providers often devote huge supercomputers to startups training AI models. Google Cloud promised another startup training AI foundation models, Magic, a cluster with “tens of thousands of Blackwell GPUs,” each with more power than a high-end gaming PC.

These clusters are easier to promise than to deliver. Microsoft, a competitor to Google’s cloud services, is reportedly struggling to meet OpenAI’s enormous compute demands, forcing the startup to turn to other sources of computing power.

World Labs’ contract with Google Cloud is non-exclusive, which means the startup can still strike deals with other cloud service providers. Google Cloud says, however, that it will get the majority of the startup’s business going forward.

Technology

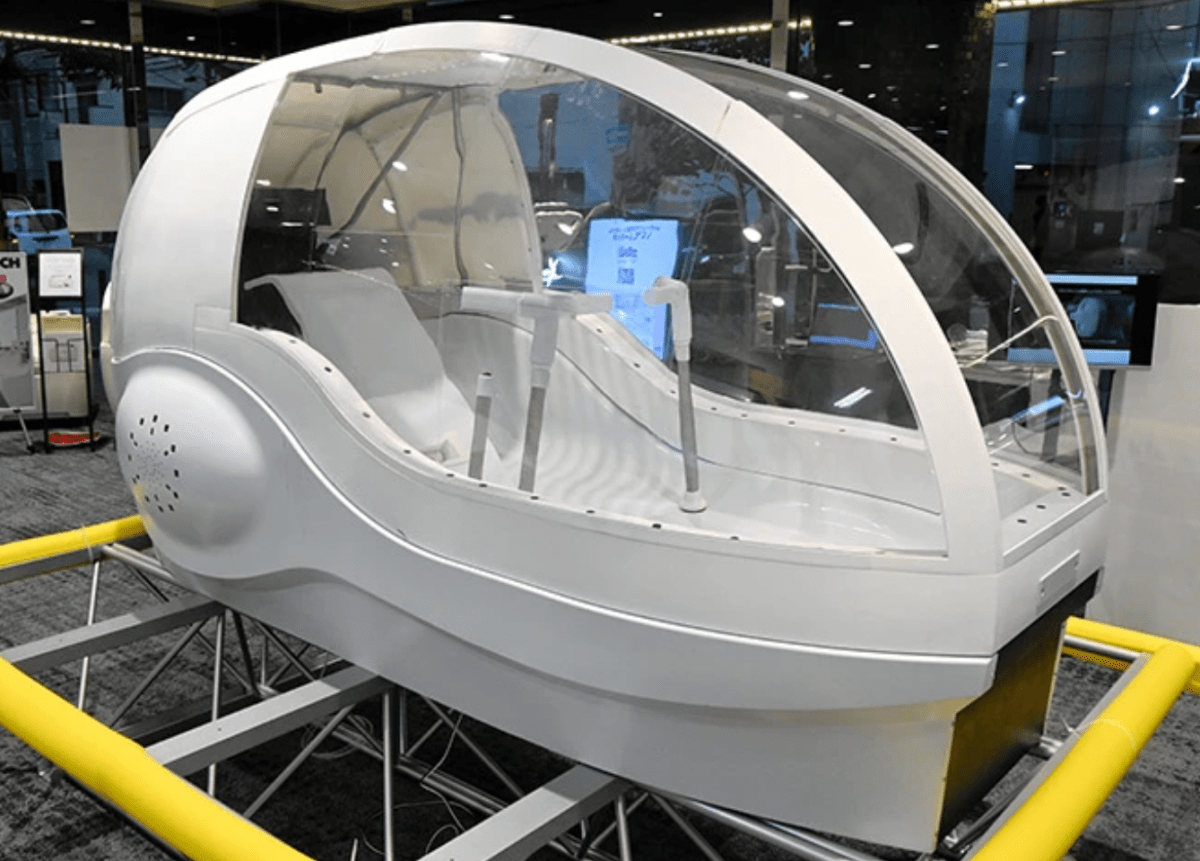

A company is developing a washing machine for humans

Forget about cold baths. Washing machines for people may soon be the next new thing.

According to one of Japan’s oldest newspapers, Osaka-based showerhead maker Science has developed a cockpit-shaped device that fills with water once a bather sits on the seat in its center, then uses sensors to measure the person’s heart rate and other biological data to make sure the temperature is right. “It also projects images onto the inside of the transparent cover to make the person feel refreshed,” the outlet says.

The device, dubbed “Mirai Ningen Sentakuki” (the human washing machine of the future), may never go on sale. For now, the company’s plans are limited to the Osaka trade fair in April, where up to eight people a day will be able to experience a 15-minute “wash and dry” after booking in advance.

Apparently, a version for home use is in the works.

Technology

Zepto raises another $350 million amid retail upheaval in India

Zepto has secured $350 million in new financing, its third round in six months, as the Indian quick-commerce startup strengthens its position against competitors ahead of a planned public offering next year.

Indian family offices, high-net-worth individuals and asset manager Motilal Oswal invested in the round, which maintains Zepto’s $5 billion valuation. Motilal co-founder Raamdeo Agrawal, the family offices of Mankind Pharma, RP-Sanjiv Goenka, Cello, Haldiram’s, Sekhsaria and Kalyan, as well as stars Amitabh Bachchan and Sachin Tendulkar, are among those backing the new raise, which is India’s largest fully domestic primary round.

The funding push comes as Zepto rushes to add Indian investors to its cap table, with foreign ownership now exceeding two-thirds. TechCrunch first reported on deliberations over the new round last month. The Mumbai-based startup has raised over $1.35 billion since June.

Quick commerce sales (delivering groceries and other items to customers’ doors in 10 minutes) will exceed $6 billion this year in India. Morgan Stanley predicts that the market will be worth $42 billion by 2030, accounting for 18.4% of total e-commerce and 2.5% of retail sales. These strong growth prospects have forced established players including Flipkart, Myntra and Nykaa to cut delivery times as they lose ground to specialized delivery apps.

While quick commerce has not taken off in much of the world, the model seems to work particularly well in India, where unorganized retail stores are ever-present.

Quick commerce platforms are creating “parallel commerce for consumers seeking convenience” in India, Morgan Stanley wrote in a note this month.

Zepto and its rivals (Zomato-owned Blinkit, Swiggy-owned Instamart and Tata-owned BigBasket) currently operate on lower margins than traditional retail. Morgan Stanley expects market leaders to achieve contribution margins of 7-8% and adjusted EBITDA margins of more than 5% by 2030. (Zepto currently spends about $35 million a month.)

An investor presentation reviewed by TechCrunch shows that Zepto, which handles more than 7 million total orders every day in more than 17 cities, is on track to reach annual sales of $2 billion. It anticipates 150% growth over the next 12 months, CEO Aadit Palicha told investors in August. The startup plans to go public in India next year.

However, the rapid growth of quick commerce has had a devastating impact on the mom-and-pop stores that dot hundreds of Indian cities, towns and villages.

According to the All India Federation of Consumer Products Distributors, about 200,000 local stores closed last year, 90,000 of them in major cities where quick commerce is more prevalent.

The federation has warned that without regulatory intervention, more local shops will be at risk of closure as quick commerce platforms prioritize growth over sustainable practices.

Zepto said it has created job opportunities for hundreds of thousands of gig workers. “From day one, our vision has been to play a small role in nation building, create millions of jobs and offer better services to Indian consumers,” Palicha said in a statement.

Regulatory challenges loom. Unless an e-commerce company is majority-owned by an Indian company or person, current regulations prevent it from operating on an inventory model. Quick commerce companies don’t currently follow these rules.

Technology

Wiz acquires Dazz for $450 million to expand cybersecurity platform

Wiz, one of the most talked-about names in the cybersecurity world, is making a major acquisition to expand its range of cloud security products, especially among developers. It is buying Dazz, a specialist in fixing security problems and managing risk. Sources say the deal is valued at $450 million, which includes cash and stock.

That is a jump on the startup’s latest funding round. In July, we reported that Dazz had raised $50 million at a post-money valuation of just under $400 million.

Remediation and posture management, two areas of focus for Dazz, are key services in the cybersecurity market that Wiz hasn’t addressed as well as it wanted to.

“Dazz is a leader in this market, with the best talent and the best customers, which fits perfectly into the company culture,” Assaf Rappaport, CEO of Wiz, said in an interview.

Remediation, which means helping an organization understand and resolve vulnerabilities, shapes how an enterprise actually handles the many vulnerability alerts it may receive from its network. Posture management is a more preventive product: it lets a company better understand the size, shape and function of its network from a security perspective, so that it can build better security services around it.

Dazz will continue to operate as a separate entity while it is integrated into the larger Wiz stack. Wiz has made a name for itself as a “one-stop shop,” and Rappaport said the integrated offering will continue to be a core part of that.

He believes this contrasts with how many other SaaS companies are built. In the security industry, Rappaport said, there are “a lot of Frankenstein mashups where companies prioritize revenue over building a single technology stack that actually works as a platform.” One could argue that integration is even more important in cybersecurity than in other areas of enterprise IT.

Wiz and Dazz already had a close relationship before this deal. Merav Bahat, the CEO who co-founded Dazz with Tomer Schwartz and Yuval Ofir (CTO and VP of R&D, respectively), worked closely with Assaf Rappaport at Microsoft, which had acquired his previous startup, Adallom.

After Rappaport left to found Wiz with his former Adallom co-founders, CTO Ami Luttwak, VP of Product Yinon Costica and VP of R&D Roy Reznik, Bahat was one of the first investors. Similarly, when Bahat founded Dazz, Rappaport was a small investor in it.

The connection goes deeper than that of work colleagues. Bahat and Rappaport are also close friends, and she was a second family to Mika, Rappaport’s beloved dog, known as Wiz’s Chief Dog Officer (complete with a LinkedIn profile). Once the deal was done, the two faced two very sad events: both Bahat’s mother and Mika died.

“We hope for a new chapter of positivity,” Bahat said. The cycle of life does indeed continue.

Rumors of this takeover began to appear earlier this month; Rappaport confirmed that this was when the two sides began talking seriously.

But that is not the only M&A conversation Wiz has been involved in. Earlier this year, Google tried to buy Wiz itself for $23 billion to build a major cybersecurity business. Wiz walked away from the deal, which would have been the biggest in Google’s history, partly because Rappaport believed Wiz could become an even larger company on its own terms. And that is what this deal aims to do.

This acquisition is a test for Wiz, which earlier this year filled its coffers with $1 billion solely for M&A purposes (it has raised almost $2 billion in total, and we hear the next round will close in a few weeks). Other deals included buying Gem Security for $350 million, but Dazz is its largest acquisition to date.

More mergers and acquisitions may be coming. “We believe next year will be an acquisition year for us,” Rappaport said.

In an interview with TC, Luttwak said that one of Wiz’s priorities now is to build more tools for developers that take into account what they need to do their jobs.

Enterprises have made significant investments in cloud services to speed up operations and make their IT more agile, but this shift has come with a significantly changed security profile for these organizations: network and data architectures are more complex and attack surfaces are larger, creating opportunities for malicious hackers to find ways into these systems. Artificial intelligence makes all of this much harder where malicious attackers are concerned. (It’s also an opportunity: the new generation of defensive tools relies on artificial intelligence.)

Wiz’s unique selling point is its all-in-one approach. Drawing data from AWS, Azure, Google Cloud and other cloud environments, Wiz scans applications, data and network processes for security risk factors and gives its users a series of detailed views to understand where these threats occur. It offers over a dozen products covering areas such as code security, container environment security and supply chain security, as well as numerous partner integrations for those working with other vendors (or to enable features that Wiz doesn’t offer directly).

Wiz did already offer some degree of remediation to help prioritize and fix problems, but as Luttwak said, the Dazz product is simply better.

“We now have a platform that actually provides a 360-degree view of risk across infrastructure and applications,” he said. “Dazz is a leader in attack surface management, the ability to collect vulnerability signals from the application layer across the entire stack and build the most incredible context that allows you to trace the situation back to engineers to help with remediation.”

For Dazz’s part, when I interviewed Bahat in July 2024, when Dazz raised $50 million at a $350 million valuation, she extolled the virtues of building strong solutions, and this week she said the third quarter was “amazing.”

“But market dynamics are what trigger these types of transactions,” she said. She confirmed that Dazz had also received takeover offers from other companies. “If you think about the customers and joint customers that we have with Wiz, it makes sense for them to have it on one platform.”

And some of Dazz’s competitors are still going it alone: Cyera, like Dazz a specialist in attack surface management, just yesterday announced a raise of $300 million at a valuation of $5 billion (which confirms our earlier reporting). But what will it do with this money? Make acquisitions, of course.

Wiz says it currently has annual recurring revenue of $500 million (it has a goal of $1 billion ARR next year) and counts more than 45% of the Fortune 100 as customers. Dazz said its ARR is in the tens of millions of dollars and is currently growing 500% on a customer base of roughly 100 organizations.
