Technology

Elon Musk’s X Could Still Be Sanctioned for Training Grok on Europeans’ Data

Earlier this week, the EU’s top privacy regulator wrapped up legal proceedings over how X processed user data to train its Grok AI chatbot, but the saga isn’t over yet for the Elon Musk-owned social media platform formerly known as Twitter. Ireland’s Data Protection Commission (DPC) confirmed to TechCrunch that it has received, and will “investigate,” a number of complaints filed under the General Data Protection Regulation (GDPR).

“The DPC will now investigate the extent to which any processing that took place complies with the relevant provisions of the GDPR,” the regulator told TechCrunch. “If, as a result of that investigation, it is determined that TUIC (Twitter International Unlimited Company, as X’s principal Irish subsidiary is still called) has breached the GDPR, the DPC will consider whether the exercise of any of the remedial powers is justified, and if so, which one(s).”

X agreed to suspend processing data for Grok training in early August, and that commitment was made permanent earlier this week. The agreement required X to delete, and stop using for AI training, the European user data it collected between May 7, 2024, and August 1, 2024, according to a copy obtained by TechCrunch. However, it is now clear that X has no obligation to delete any AI models trained on that data.

So far, X has not faced any sanctions from the DPC for processing Europeans’ personal data to train Grok without people’s consent, despite urgent legal action by the DPC to block the data collection. Fines under the GDPR can be severe, reaching up to 4% of global annual turnover. (Given that the company’s revenue is currently in free fall and may struggle to reach $500 million this year, judging by its published quarterly results, that could be particularly painful.)

Regulators also have the power to order operational changes by demanding that violations stop. However, investigating complaints and enforcing decisions can take a long time, even years.

This is significant because, while X has been forced to stop using Europeans’ data to train Grok, it can still run any AI models it has already trained on data from people who did not consent to its use, with no urgent intervention or sanctions so far to prevent this.

When asked whether the commitment the DPC obtained from X last month required X to delete any AI models trained on Europeans’ data, the DPC confirmed to us that it didn’t: “The commitment does not require TUIC to take that action; it required TUIC to permanently cease processing the datasets covered by the commitment,” the spokesperson said.

Some might say this is a clever way for X (or other model trainers) to get around EU privacy rules: Step 1) Silently use people’s data; Step 2) use it to train AI models; and, when the cat’s out of the bag and regulators eventually come knocking, commit to deleting the *data* while leaving the trained AI models intact. Step 3) Profit based on Grok!?

When asked about this risk, the DPC replied that the aim of the urgent legal proceedings was to address “significant concerns” that X’s processing of EU and EEA user data to train Grok “gave rise to risks to the fundamental rights and freedoms of data subjects”. However, it didn’t explain why it does not have the same urgent concerns about the risks to the fundamental rights and freedoms of Europeans resulting from their information being embedded in Grok.

Generative AI tools are notorious for producing false information. Musk’s take on the category is also deliberately rude (or “anti-woke,” as he calls it). That could raise the stakes for the kinds of content it might produce about users whose data was ingested to train the bot.

One reason the Irish regulator may be more cautious about how to deal with the issue is that these AI tools are still relatively new. There is also uncertainty among European privacy watchdogs about how to enforce the GDPR on such a new technology. It is also unclear whether the regulation’s powers would include the ability to order the deletion of an AI model if the technology was trained on data processed unlawfully.

However, as complaints in this area continue to multiply, data protection authorities will ultimately have to confront the issue of artificial intelligence.

Pickles quits

In separate news, it emerged overnight Friday that X’s head of global affairs has left. Reuters reported the departure of long-time employee Nick Pickles, a British national who spent a decade at Twitter and rose through the ranks during Musk’s tenure.

In a post on X, Pickles says he made the decision to leave “a few months ago” but did not provide details on his reasons for leaving.

But it’s clear the company has a lot on its plate, including dealing with a ban in Brazil and the political backlash in the U.K. over its role in spreading disinformation related to last month’s unrest there, with Musk having a personal penchant for adding fuel to the fire (including posting on X suggesting that “civil war is inevitable” for the U.K.).

In the EU, X is also under investigation under the bloc’s content moderation framework, with the first batch of complaints under the Digital Services Act filed in July. Musk was also recently singled out for a personal warning in an open letter written by the bloc’s internal market commissioner, Thierry Breton, to which the chaos-loving billionaire chose to reply with an offensive meme.

Technology

Revolut will introduce mortgage loans, smart ATMs and business lending products

Revolut, the London-based fintech unicorn, shared several elements of the company’s 2025 roadmap at a company event in London on Friday. One of the company’s main goals for next year will be to introduce an AI-enabled assistant that will help its 50 million customers navigate its financial apps, manage money and customize the software.

Considering that artificial intelligence is at the center of everyone’s attention, this move is not surprising. But an AI assistant could actually help differentiate Revolut from traditional banking services, which have been slower to adapt to new technologies.

When Revolut launched its app almost 10 years ago, many people discovered the concept of debit cards with real-time payment notifications. Users could also lock the card from the app.

Many banks now let you control your card from your phone. However, they are unlikely to offer useful AI features yet.

In addition to the AI assistant, Revolut announced that it will introduce branded ATMs. These will dispense cash (obviously), but also cards, which could encourage new sign-ups.

Revolut said it plans to add facial recognition features to its ATMs in the future, which could help with authentication without using the usual card and PIN protocol. It will be interesting to see how it implements this technology in a way that complies with European Union data protection regulations, which require explicit consent to use biometric data for identification purposes.

According to the company, Revolut ATMs will start appearing in Spain in early 2025.

Revolut has had a banking license in Europe for some time, which means it can offer lending products to its retail customers. It already offers credit cards and personal loans in some countries.

Now the company plans to expand into mortgage loans, one of the most popular lending products in Europe, with an emphasis on speed. If it’s a simple request, customers should generally expect immediate approval and a final offer within one business day. However, mortgages are rarely simple, so it will be interesting to see whether Revolut is overpromising.

It appears that the mortgage rollout will be slow. Revolut said it is starting in Lithuania, with Ireland and France expected to follow, although all of these launches are scheduled for 2025.

Finally, Revolut intends to expand its business offering in Europe with its first loan products and savings accounts. In the payments space, it will enable business customers to offer “buy now, pay later” payment options.

Revolut will also introduce Revolut kiosks with biometric payments, aimed specifically at restaurants and stores.

If all these features seem overwhelming, it’s because Revolut is consistently committed to product development, rolling out new features quickly. And 2025 looks no different.

Technology

Flipkart co-founder Binny Bansal is leaving PhonePe’s board

Flipkart co-founder Binny Bansal has stepped down from PhonePe’s board, three quarters after making a similar move at the e-commerce giant.

Bengaluru-based PhonePe said it has appointed Manish Sabharwal, executive director at recruitment and human resources firm Teamlease, as an independent director and chairman of the audit committee.

Bansal played a key role in Flipkart’s acquisition of PhonePe in 2016 and has since served on the fintech’s board. The Walmart-backed startup, which operates India’s most popular mobile payment app, spun off from Flipkart in 2022 and was valued at $12 billion in funding rounds that raised about $850 million last year.

Bansal still holds about 1% of PhonePe. Neither party explained why he was leaving the board.

“I would like to express my heartfelt gratitude to Binny Bansal for being one of the first and staunchest supporters of PhonePe,” Sameer Nigam, co-founder and CEO of PhonePe, said in a statement. “His active involvement, strategic advice and personal mentoring have profoundly enriched our discussions. We will miss Binny!”

Technology

A company is now developing washing machines for humans

Forget about cold baths. Washing machines for people may soon be a new alternative.

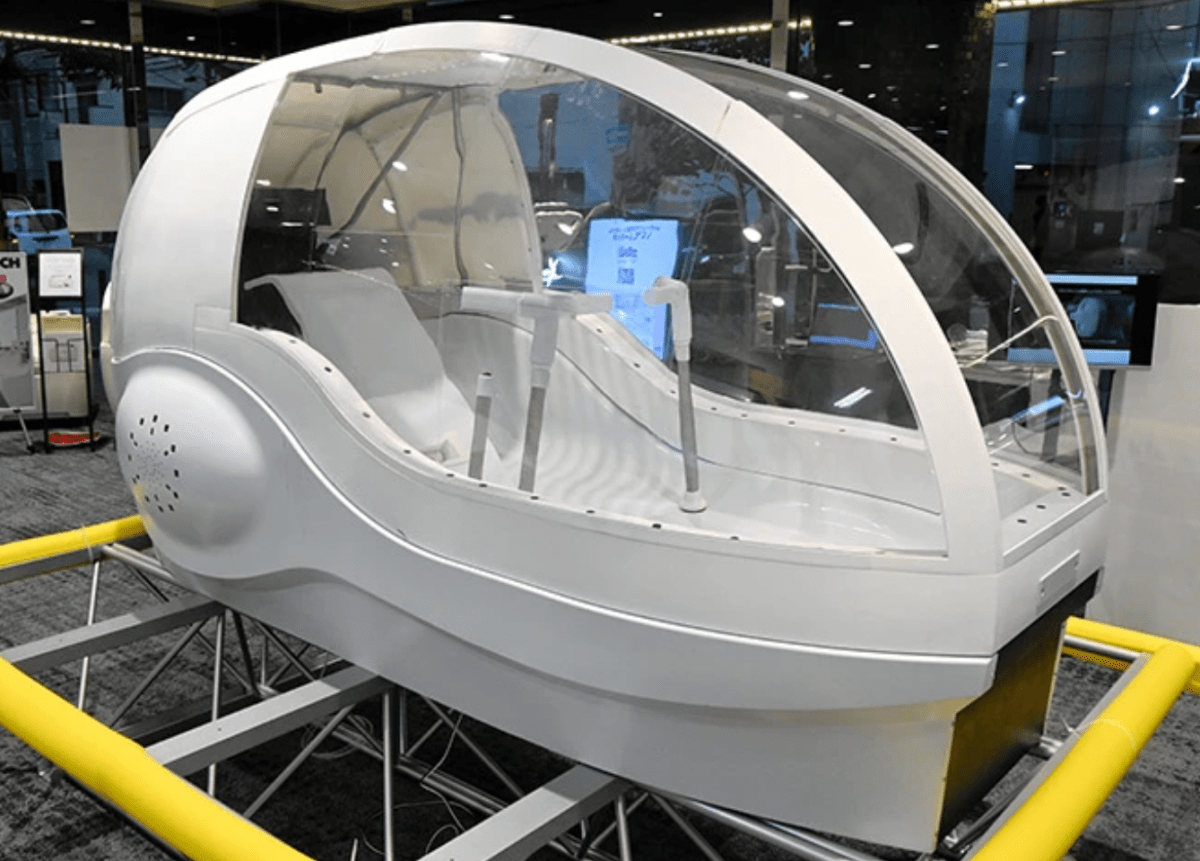

According to one of Japan’s oldest newspapers, Osaka-based showerhead maker Science has developed a cockpit-shaped device that fills with water when a bather sits on a seat in the center, and that measures the person’s heart rate and other biological data using sensors to make sure the temperature is just right. “It also projects images onto the inside of the transparent cover to make the person feel refreshed,” the outlet says.

The device, dubbed “Mirai Ningen Sentakuki” (the human washing machine of the future), may never go on sale. Indeed, for now the company’s plans are limited to the Osaka trade fair in April, where up to eight people will be able to experience a 15-minute “wash and dry” each day after booking in advance.

Apparently a version for home use is in the works.
