Technology

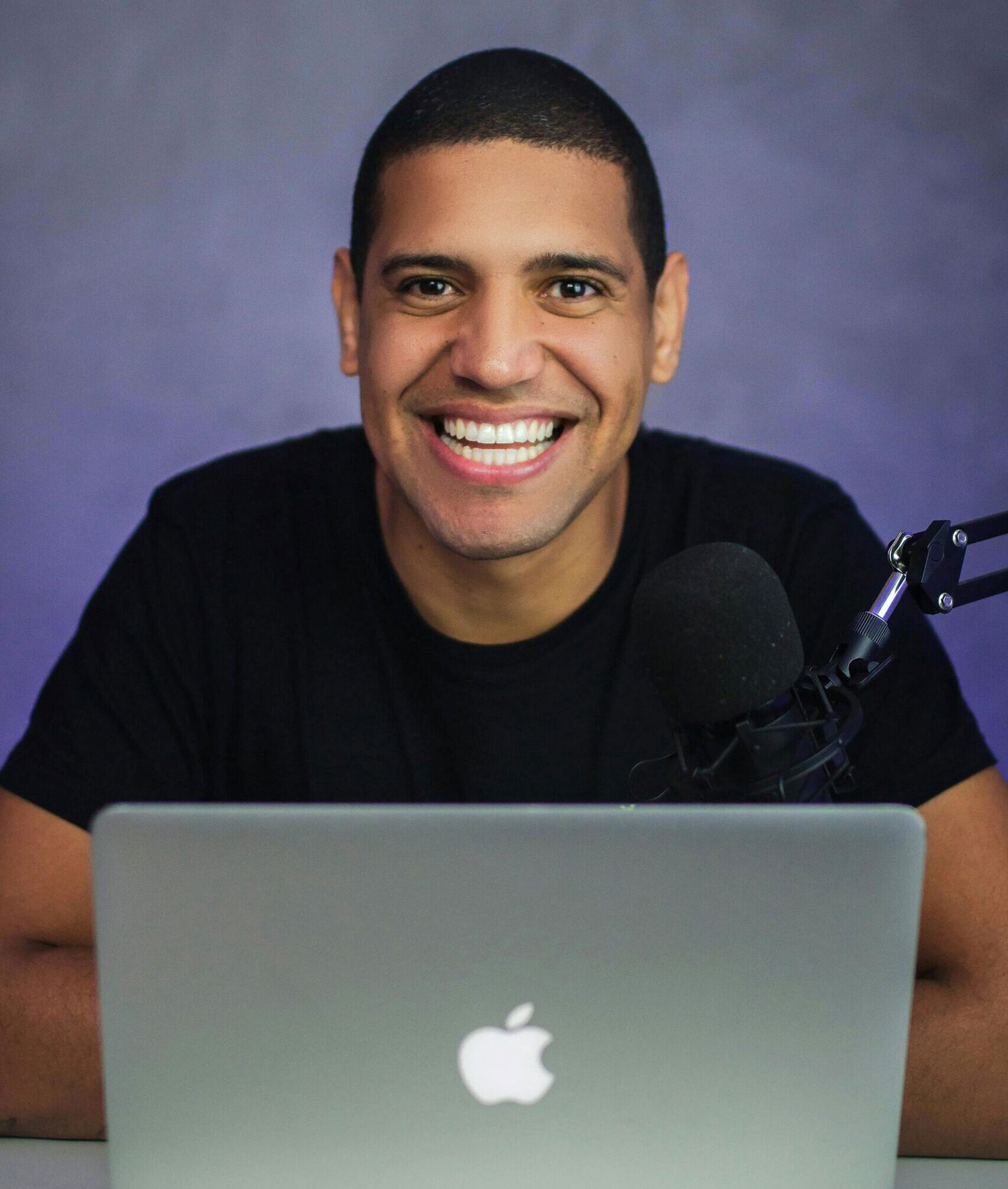

AWS CEO Matt Garman on generative AI, open source, and shutting down services

It was quite a surprise when Adam Selipsky stepped down as CEO of Amazon’s AWS cloud computing unit. Perhaps an equally big surprise was that he was replaced by Matt Garman. Garman joined Amazon as an intern in 2005 and became a full-time employee in 2006, working on early AWS products. Few people know the company better than Garman, whose last position before becoming CEO was senior vice president of sales, marketing and global services for AWS.

Garman told me in an interview last week that he hasn’t made any significant changes to the organization yet. “Not much has changed in the organization. The business is doing quite well, so there is no need to make huge changes to anything we are focusing on,” he said. However, he identified several areas where he believes the company must focus and where he sees opportunities for AWS.

Emphasize start-ups and rapid innovation

One of them, somewhat surprisingly, is startups. “I think we have evolved as an organization. … In the early days of AWS, our main focus was on how to really attract developers and startups, and we got a lot of traction there from the beginning,” he explained. “And then we started thinking about how do we appeal to larger businesses, how do we appeal to governments, how do we appeal to regulated sectors around the world? And I think one of the things that I just emphasized again – it’s not really a change – but I just emphasized that we can’t lose focus on startups and developers. We have to do all these things.”

The second area he wants the team to focus on is keeping up with the whirlwind of change in the industry.

“I have really emphasized with the team how essential it is for us to stay at the forefront in terms of the set of services, capabilities, features and functions that we have today, and to continue to lean forward and build a roadmap of real innovation,” he said. “I think the reason customers use AWS today is because we have the best and broadest set of services. The reason people turn to us today is because we continue to deliver industry-leading security and operational efficiency by far, and help them innovate and move faster. We must continue to deliver on that roadmap. It’s not really a change in itself, but that is probably what I have emphasized the most: how essential it is for us to maintain the level of innovation and the speed at which we deliver products.”

When I asked him whether he thought the company perhaps hadn’t innovated fast enough in the past, he said no. “I think the pace of innovation is only going to accelerate, so it’s just important to emphasize that we also need to accelerate the pace of innovation. It’s not that we’re losing it; it is simply an emphasis on how much we need to accelerate given the pace of technology.”

Generative Artificial Intelligence in AWS

With the emergence of generative AI and technology changing rapidly, AWS must be “on the cutting edge of all of them,” he said.

Shortly after ChatGPT’s launch, many experts questioned whether AWS had been too slow to launch generative AI tools of its own and had left the door open to competitors like Google Cloud and Microsoft Azure. However, Garman believes this was more perception than reality. He noted that AWS had long offered successful machine learning services like SageMaker, even before generative AI became a buzzword. He also noted that the company took a more deliberate approach to generative AI than perhaps some of its competitors.

“We were looking at generative AI before it became a widely accepted thing, but I will say that when ChatGPT came out, it was kind of a discovery of a new area and how to apply this technology. I think everyone was excited and energized by it, right? … I think a group of people – our competitors – were kind of racing to put chatbots on top of everything and show that they are leading the way in generative AI,” he said.

Instead, Garman said, the AWS team wanted to take a step back and look at how its customers, whether startups or enterprises, could best integrate the technology into their applications and leverage their own differentiated data. “They will need a platform that they can build on freely and think of it as a platform to build on rather than an application that they will customize. That’s why we took the time to build this platform,” he said.

In the case of AWS, that platform is Bedrock, where it offers access to a wide variety of open and proprietary models. Just doing this, and allowing users to chain different models together, was a bit controversial at the time, he said. “But for us, we thought that was probably where the world was going, and now it is pretty certain that that is where the world is going,” he said. He said he thinks everyone will want custom models and will bring their own data to them.

Garman said Bedrock is “growing like a weed right now.”

One problem around generative AI that he still wants to solve is cost. “A lot of this is doubling down on our custom silicon and making some other changes to the models to make the inference that you’re going to be building into your applications (something) much more affordable.”

Garman said the next generation of AWS’s custom Trainium chips, which the company debuted at the re:Invent conference in late 2023, will be launched later this year. “I’m really excited that we can really turn this cost curve around and start delivering real value to customers.”

One area where AWS hasn’t necessarily tried to compete with some of the other tech giants is in building its own large language models. When I asked Garman about this, he noted that these are still something the company is “very focused on.” He thinks it is important for AWS to have its own models while still using third-party models. But he also wants to make sure AWS’s own models can bring unique value and differentiation, either through leveraging its own data or “through other areas where we see opportunities.”

Among these areas of opportunity is cost, but also agents, which everyone in the industry seems optimistic about at the moment. “Having models that are reliable, at a very high level of correctness, and can call other APIs and do things. I think there is some innovation that can be done in this area,” Garman said. He says agents will unlock far more utility from generative AI by automating processes on behalf of their users.

Q, a chatbot powered by artificial intelligence

At the recent re:Invent conference, AWS also unveiled Q, its generative AI-powered assistant. Currently, there are essentially two versions of it: Q Developer and Q Business.

Q Developer integrates with many of the most popular development environments and, among other things, offers code completion and tools for modernizing legacy Java applications.

“We really think of Q Developer as a broader sense of really helping throughout the developer lifecycle,” Garman said. “I think a lot of early developer tools focused on coding, and we’re thinking more about how do we help with everything that’s painful and labor-intensive for developers?”

At Amazon, teams used Q Developer to update 30,000 Java applications, saving $260 million and 4,500 years of developer labor, Garman said.

Q Business uses similar technologies under the hood, but focuses on aggregating internal company data from many different sources and making it searchable through a ChatGPT-like Q&A service. The company “sees a real driving force there,” Garman said.

Shutting down services

While Garman noted that not much has changed under his leadership, one thing that has happened recently at AWS is that the company announced plans to shut down some of its services. Traditionally, AWS hasn’t done this very often, but this summer it announced plans to shut down services like the Cloud9 web IDE, the GitHub competitor CodeCommit, CloudSearch and others.

“It’s sort of a clean-up where we looked at a number of services and either, frankly, introduced a better service that people should move to, or we launched one that we just didn’t get right,” he explained. “And by the way, some of them we just don’t do well, and their traction has been quite poor. We looked at it and said, ‘You know what? The partner ecosystem actually has a better solution and we intend to build on it.’ You can’t invest in everything. You can’t build everything. We do not like to do that. We take this seriously if companies want to rely on us to support their activities in the long run. That’s why we’re very careful.”

AWS and the open source ecosystem

One relationship that has long been difficult for AWS, or at least has been perceived as difficult, is its relationship with the open source ecosystem. This is changing, and just a few weeks ago AWS contributed its OpenSearch code to the Linux Foundation and the newly formed OpenSearch Foundation.

“I think our view is pretty simple,” Garman said when I asked him what he thought about the future relationship between AWS and open source software. “We love open source. We rely on open source code. I think we try to take advantage of the open source community and to be a huge contributor back to the open source community. I think that is what open source is all about, benefiting from the community, and that is why we take it seriously.”

He noted that AWS has made key investments in open source software and in many of its own open source projects.

“Most of the friction has come from companies that originally started open source projects and then decided to sort of un-open-source them, and I think they have the right to do that. But you know, that is not the true spirit of open source. Whenever we see people doing this, take Elastic for instance, and OpenSearch (AWS’ ElasticSearch fork) is quite popular. … If there’s a Linux (Foundation) project, or an Apache project, or anything else that we can build on, we want to build on that; we contribute to them. I think we’ve evolved and learned as a company how to be good stewards of this community, and I hope that has been noticed by others.”

Technology

Young, talented and Black Yale students raise $3 million for a new app

Nathaneo Johnson and Sean Hargrow, juniors at Yale University, raised $3 million in just 14 days to fund their startup, Series, an AI-powered social app designed to foster meaningful connections and challenge platforms such as LinkedIn and Instagram.

The duo, who co-host the podcast A series of founders, created the app after recognizing a gap in the way digital platforms help people connect. Series focuses on facilitating authentic introductions rather than on collecting likes, followers or engagement metrics.

“Social media is great for broadcasting, but it does not necessarily help you meet the right people at the right time,” said Johnson in an interview with Entrepreneur magazine.

Series connects users through AI “friends” that communicate via iMessage and help make introductions. Users state specific needs, such as looking for co-founders, mentors, collaborators or investors, and the AI facilitates introductions based on mutual value. The concept draws comparisons to LinkedIn, but with a more personal experience.

“You post photos on Instagram, post videos on TikTok and post job updates on LinkedIn … And that’s where you have this micro-influencer band,” Johnson added.

The app aims to avoid the superficial character of typical social platforms. Hargrow emphasized that while aesthetics often dominate on Instagram and viral content drives TikTok, Series is about intentional, deliberate connections.

“We are not trying to replace relationships in the real world; we are going to make it easier for people to find the right relationships,” said Hargrow.

Parable Ventures led the pre-seed funding round, which included participation from Pear VC, DGB VC, 47th Street, Radicle Impact, Uncommon Projects and several notable angel investors, including Reddit CEO Steve Huffman and GPTZero founder Edward Tian. Johnson called one investor meeting a “million-dollar dinner,” reflecting how their pitch resonated with early backers.

Although they are not business majors, Johnson and Hargrow built their entrepreneurial foundation through their podcast, in which they interview founders and C-suite leaders about lesser-known aspects of building a company, such as accounting, business law and team formation.

Since the launch of Series, more than 32,000 messages between “friends” have been exchanged during the test phases. The app’s initial target is the entrepreneur market. Still, the founders hope to expand into finance, dating, education and health, ultimately striving to build the most accessible warm network in the world.

Technology

Used Tesla listings rose sharply in March

A growing number of Tesla owners are putting their used vehicles up for sale as consumers react to Elon Musk’s political activities and the worldwide protests they have sparked.

In March, the number of used Tesla vehicles listed for sale on autotrader.com rose sharply, Sherwood News reported, citing data from Autotrader’s parent company, Cox Automotive. The numbers were particularly high in the last week of March, when on average more than 13,000 used Teslas were listed. That was not only a record but also an increase of 67% compared with the same week a year earlier.

At the same time, sales of new Tesla vehicles have slowed even as EV sales from other brands have increased. In the first quarter of 2025, almost 300,000 new EVs were sold in the U.S., according to the most recent Kelley Blue Book report, an increase of 10.6% year over year. Meanwhile, Tesla sales fell in the first quarter, down almost 9% compared with the same period in 2024.

Automakers such as GM and Hyundai are still behind Tesla, but they are seeing growth. For example, GM brands sold more than 30,000 EVs in the first quarter, almost double the volume of a year ago, according to Kelley Blue Book.

Technology

Ilya Sutskever uses Google Cloud to power his AI startup’s research

Safe Superintelligence (SSI), the AI startup of OpenAI co-founder and former chief scientist Ilya Sutskever, is using Google Cloud’s TPU systems to power its AI research, as part of a new partnership that the companies announced on Wednesday in a press release.

Google Cloud says that SSI is using TPUs to “accelerate its research and development to build safe, superintelligent artificial intelligence.”

Cloud providers are chasing a handful of AI unicorn startups that spend hundreds of millions of dollars annually on computing power for training AI foundation models. SSI’s agreement with Google Cloud suggests that the former will spend a large part of its computing budget with Google Cloud; a source familiar with the matter tells TechCrunch that Google Cloud is SSI’s primary compute provider.

Google Cloud has a history of striking computing agreements with former AI researchers, many of whom now lead multibillion-dollar AI startups. (Sutskever once worked at Google.) In October, Google Cloud said that it would be the primary compute provider for World Labs, founded by former Google Cloud AI scientist Fei-Fei Li.

It is not clear whether SSI has struck partnerships with other cloud or compute providers. A Google Cloud spokesperson declined to comment. A spokesperson for Safe Superintelligence did not immediately respond to a request for comment.

SSI came out of stealth in June 2024, months after Sutskever left his role as OpenAI’s chief scientist. The company has $1 billion in backing from Andreessen Horowitz, Sequoia Capital, DST Global, SV Angel and others.

Since SSI’s launch, we have heard relatively little about the startup’s activities. On its website, SSI says that the development of safe, superintelligent AI systems is “our mission, our name and our entire product roadmap, because this is our only goal.” Sutskever said earlier that he had identified a “new mountain to climb” and is investigating new ways to improve the performance of frontier AI models.

Before co-founding OpenAI, Sutskever spent several years at Google Brain researching neural networks. After years of leading OpenAI’s AI safety work, Sutskever played a key role in the ouster of OpenAI CEO Sam Altman in November 2023. Sutskever later joined the employee movement to reinstate Altman as CEO.

After the ordeal, Sutskever was reportedly not seen in OpenAI’s offices for months, and he eventually left the startup to start SSI.
