Technology

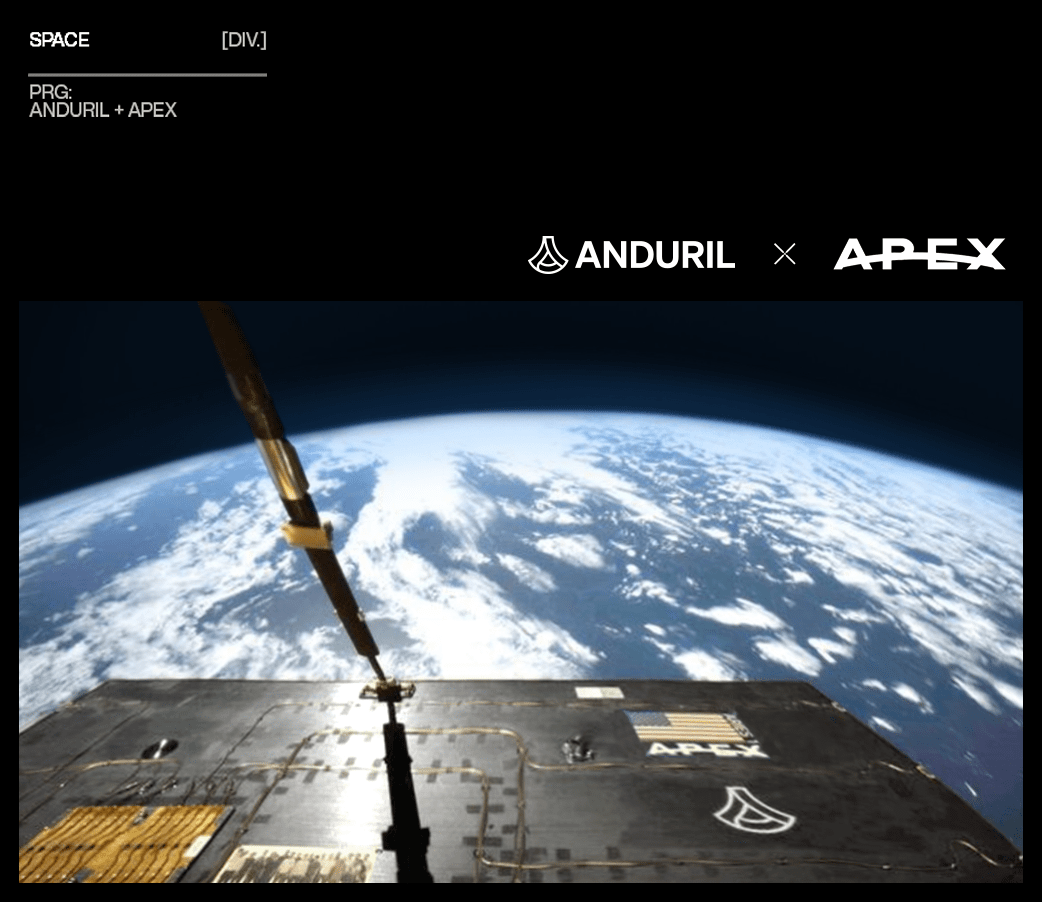

Anduril is speeding up the launch of defense payloads by buying off-the-shelf satellite buses from Apex

Anduril is expanding even further, into the “high ground.”

The company, best known for its AI-powered defense products in the air, on land and at sea, is partnering with satellite bus startup Apex Space to rapidly deploy payloads into orbit for the US Department of Defense.

This is a rare case of an emerging defense contractor choosing to partner with a supplier rather than build the product itself or simply acquire the supplier outright. But the partnership makes sense: Anduril attributes much of its success to its approach to product design and development, which emphasizes rapidly building large numbers of products from off-the-shelf components to reduce costs. Apex does something similar for satellite buses, the part of the spacecraft that carries the payload. Historically, satellite buses have been custom-engineered one at a time, with long lead times and high prices.

“We’re really focused on recreating the same things we’ve done in other domains in the space domain,” Gokul Subramanian, Anduril’s VP of space and software, said at a press conference. “If you look at what Anduril has done successfully at sea, in the air and on land, there is a shift from the low-volume, high-cost systems that have traditionally been used to high-volume, low-cost systems. We have the same belief in space: to succeed in space, we need to move to high-volume, low-cost production.”

Ian Cinnamon, co-founder and CEO of Apex Space, said the satellite bus is the “biggest bottleneck” in the space ecosystem, preventing America from putting more mass into orbit. Apex’s goal is to deliver satellite buses to customers in weeks rather than years, with more transparent pricing and a standardized product.

An Anduril-built payload, which Subramanian called a “mission data processor,” flew in March on Apex’s first-ever mission; it enables on-orbit processing of images captured by the satellite. The payload runs on Lattice, the command-and-control software that underpins all Anduril products. In short, Anduril was able to demonstrate pointing a spacecraft at a specific location, capturing an image of what the spacecraft saw, processing that image and transmitting the data to Earth, all fully autonomously.

“It was the first experiment that gave us confidence in our vision for space, our collaboration with Ian and the bus platform they built,” he said.

Anduril has already purchased a dedicated satellite bus from Apex, which is set to launch next year. Anduril will operate this system, which will carry payloads built both in-house and by third parties. This will be the model going forward, the two executives explained: Apex will provide the buses, and Anduril will “mission the system,” Subramanian said.

Subramanian declined to comment on the specific opportunities the company hopes to pursue with the new partnership, but it puts the company in a strong position to take on a prime contractor role for some coveted contracts. For example, the Space Development Agency’s Proliferated Warfighter Space Architecture program is deploying hundreds of satellites to replace the Space Force’s aging missile tracking and defense architecture. SDA is spending huge sums on these satellites; so far, contracts to build satellites under the program have gone to companies including Sierra Space, Rocket Lab and SpaceX. Anduril undoubtedly hopes to join the club.

This is not Anduril’s first foray into space: in July 2023, the company won a $10.5 million contract from Space Systems Command to integrate Lattice into Space Surveillance Network (SSN) sensors, which are used for early missile warning. Last week, the company was also awarded a $25.3 million contract from the Space Force to provide additional SSN upgrades.

This is the first of many partnerships Anduril intends to announce, including with other bus providers, Subramanian added.

Technology

Signal is the No. 1 app in the Netherlands. But why?

Privacy-focused messaging app Signal soared up the Dutch app stores last month, often sitting at the top as the most downloaded free app across all categories on both iOS and Android, according to data from several app-tracking platforms such as Sensor Tower.

The app has seen spikes in popularity over the years, often in response to policy changes at rivals such as WhatsApp or to geopolitical events. That is because Signal has built a reputation as the more privacy-friendly option: it is run by a nonprofit foundation (albeit one based in the US) rather than a private company focused on monetizing data. In addition, Signal collects minimal metadata.

In 2025, with a new US president whom Big Tech has warmly embraced, it is not surprising that digital privacy tools are having a moment, especially in Europe, which has drawn President Trump’s ire.

But this time, Signal’s surge in one very specific place, the Netherlands, is particularly eye-catching.

In an interview with Dutch newspaper De Telegraaf last week, Signal president Meredith Whittaker noted that the number of “new registrations” in the Netherlands had risen 25-fold this year, though it is not clear what the exact comparison period for this figure is.

Asked why the Netherlands was seeing such growth, Whittaker pointed to a combination of factors: “a growing awareness of privacy, distrust of Big Tech, and a political reality in which people realize how sensitive digital communication can be.”

Data provided to TechCrunch by app intelligence company Appfigures shows the extent of Signal’s rise in the Netherlands. According to its data, Signal ranked 365th among iPhone apps in the Netherlands on January 1 and did not appear on the overall top apps chart. Then, from around January 5, it began climbing the rankings, reaching the top spot by February 2.

Signal moved in and out of the top spot over the following weeks, spending much of mid-February at No. 1, including every day since February 22. Digging deeper into the data, Appfigures estimates that total downloads across the Apple and Google app stores jumped from their December 2024 level to 99,000 in January and rose to 233,000 in February, a 958% increase over December.

While part of this growth could be attributed to Signal having lower market saturation in the Netherlands than in other markets, the app’s sustained position at the top stands out compared with neighboring markets of similar size.

“No other market comes close to the Netherlands in terms of growth between December and February,” Appfigures told TechCrunch.

For comparison, downloads since December rose by more than 250% in Belgium, 153% in Sweden and 95% in Denmark.

So why is Signal experiencing what one Redditor called “a moment of mass adoption” in the Netherlands?

Clear signal

Rejo Zenger, senior policy advisor at Dutch digital rights foundation Bits of Freedom, said that although it is difficult to pin down one specific reason, he is not surprised.

Recent changes in the US have seen major platform providers align themselves with the new Trump administration, and this has sparked significant public and media debate. Europe’s reliance on technology from large private American corporations has become a focal point of that debate.

“The Dutch, like many others, are very dependent on infrastructure provided by extremely dominant technology companies, mainly from the USA,” Zenger said. “What this means, and the risk that comes with it, has been clearly demonstrated in the last few weeks. As a result, the public debate in the Netherlands has been relatively sharp. Where in the past this issue was discussed only at one level, I feel that we are now having the debate at a higher level.”

In this context, the public may conflate market dominance with data protection abuses. Since companies such as Meta are regularly investigated and fined over their data privacy practices, Signal may seem like the lesser evil: it is based in the US, but it is backed by a nonprofit and encrypts both the content of messages and the metadata around them.

Vincent Böhre, director of Dutch privacy organization Privacy First, also pointed to increased media coverage and a broader shift in public opinion.

“Since [Trump] was re-elected in the United States a few months ago, the Dutch and European media have paid a lot of attention to those who seem to support Trump, such as (Elon) Musk. Articles criticizing X (formerly Twitter) and Meta appear everywhere in the Dutch media, which is shifting Dutch public opinion: even people who never really knew or cared about privacy and security on social media have suddenly become interested in privacy-friendly alternatives, in particular Signal.”

Signal of intent

While the Netherlands is just one market of 18 million people within a European population of more than 700 million, its rise in adoption could signal a broader trend across the continent, especially as governments try to weaken privacy protections.

For example, Apple recently pulled end-to-end encryption for iCloud in the UK in response to government efforts to install a backdoor.

Speaking at RightsCon 25 in Taiwan this week, Whittaker reaffirmed Signal’s unwavering stance on privacy.

“Signal’s position on this is very clear: we will not walk back, adulterate or otherwise disrupt the robust privacy and security guarantees that people rely on,” Whittaker said. “Whether that disruption or backdoor is called client-side scanning or removing encryption protections from one feature or another, similar to what Apple has been forced to do in the UK.”

Separately, in an interview with the Swedish public broadcaster, Whittaker said that Signal would not comply with a proposed Swedish law requiring messaging apps to store messages.

“In practice, this means asking us to break our encryption, which is the foundation of our entire operation,” Whittaker said. “Asking us to store data would undermine our entire architecture, and we would never do it. We would rather leave the Swedish market completely.”

TechCrunch reached out to Signal for comment but had not heard back by the time of publication.

Technology

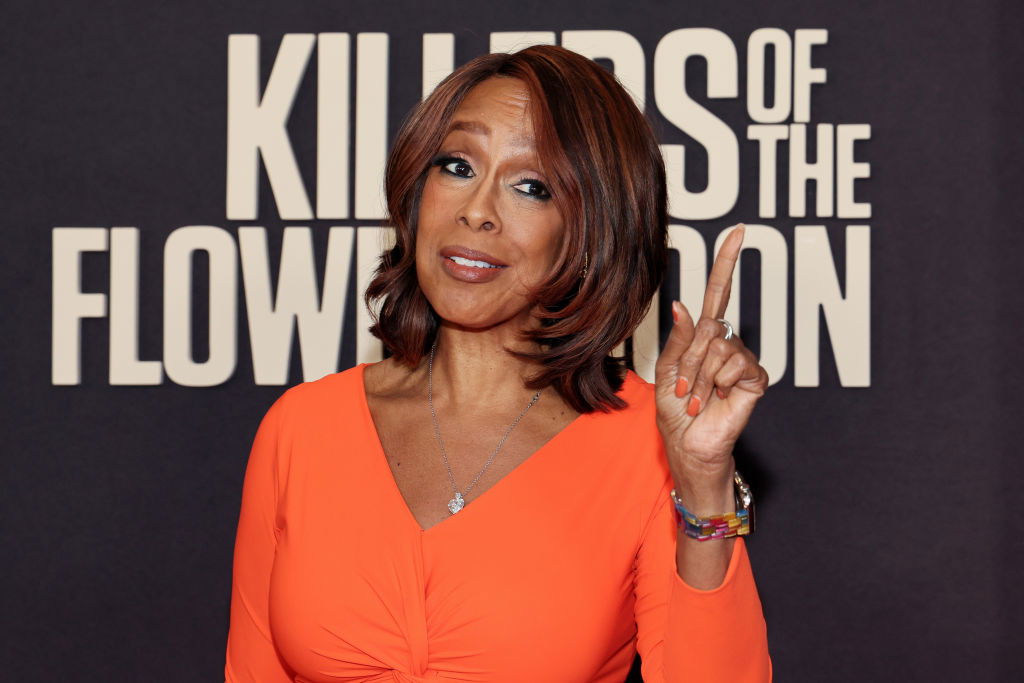

Gayle King announces she will join an all-female space mission

Gayle King will join Blue Origin’s 31st civilian spaceflight.

Gayle King announced that she is going to space. The morning TV host shared the news on CBS Mornings.

King revealed her participation in Blue Origin’s 31st flight, NS-31. Before discussing the details of the mission, she and her co-hosts presented a video montage describing her long-standing fascination with space travel.

In one clip, King said: “I am excited to watch the launch at home in my pajamas.”

Her enthusiasm led to an invitation from Blue Origin. The television personality will fly with an all-female crew, including award-winning journalist Lauren Sánchez, Grammy-winning singer Katy Perry and astronaut Aisha Bowe.

The soon-to-be space explorer admitted that she hesitated at first.

“I don’t know how to explain being terrified and excited at the same time,” King said.

To make her decision, King turned to a group of loved ones, including her children and her close friend Oprah Winfrey. She said that once her most trusted confidants approved, she was ready.

“When Kirby, Will and Oprah were fine, I was fine,” King said. “I thought Oprah would say no. She said, ‘I think if you don’t do it, and they all come back and you had the opportunity to do it, you’ll kick yourself.’ She is right.”

King will not be the first television host to venture into space with Blue Origin. In 2021, then-Good Morning America co-host Michael Strahan flew on Blue Origin’s third civilian flight. The former NFL star and broadcaster was elated after returning, describing how the experience gave him a new “perspective” on the world.

“I want to come back,” said Strahan.

Blue Origin, founded by Amazon billionaire Jeff Bezos in 2000, is a private aerospace company focused on space travel for civilians and on developing technology for long-term space exploration.

King’s upcoming New Shepard flight will be part of Blue Origin’s ongoing effort to normalize civilian space travel.

Technology

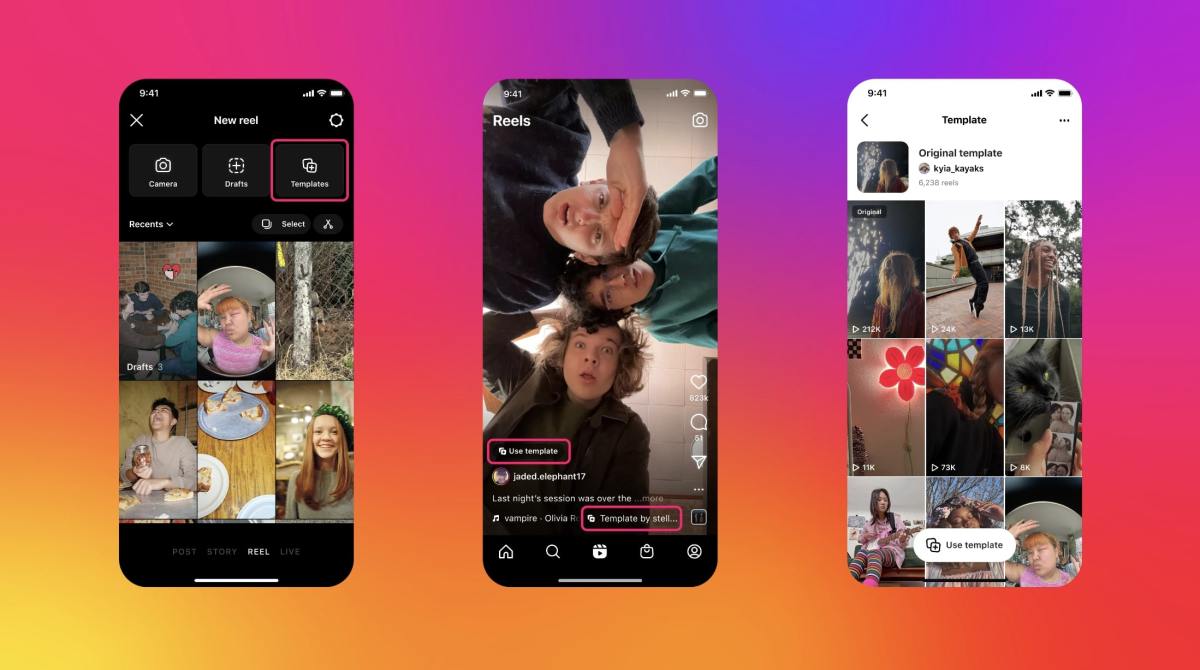

Instagram may spin Reels off into a separate app

Meta is considering a standalone app for short videos, The Information reported, citing an anonymous source who heard Instagram head Adam Mosseri discuss the project with staff.

The project, reportedly code-named Ray, aims to improve recommendations for new and existing users in the US and to include more three-minute videos, the report said, citing the source.

Meta did not immediately respond to a request for comment.

Last month, the company announced a video editing app called Edits to compete with CapCut (owned by TikTok parent company ByteDance), as it sought to capitalize on the uncertain future of TikTok and ByteDance in the US.

Currently, the Instagram feed is a mix of photos, videos (Reels) and Stories. However, many users believe the app has become cluttered because it has leaned into video rather than sticking to its roots as a photo-sharing app. If the company spins Reels off into a standalone short-video app, it could give Instagram room to emphasize its other features.

At the start of this year, Instagram began paying creators to promote Instagram on other platforms, such as TikTok, Snapchat and YouTube. It has also reportedly begun offering big money to creators who post exclusively on Reels.
