Technology

Google Gemini: everything you need to know about the new generative artificial intelligence platform

Google is trying to impress with Gemini, its flagship suite of generative AI models, applications and services.

So what are Gemini? How can you use it? And how does it compare to the competition?

To help you sustain with the latest Gemini developments, we have created this handy guide, which we’ll keep updating as new Gemini models, features, and news about Google’s plans for Gemini grow to be available.

What is Gemini?

Gemini is owned by Google long promised, a family of next-generation GenAI models developed by Google’s artificial intelligence labs DeepMind and Google Research. It is available in three flavors:

- Gemini Ultrathe best Gemini model.

- Gemini Pro“lite” Gemini model.

- Gemini Nanoa smaller “distilled” model that works on mobile devices like the Pixel 8 Pro.

All Gemini models were trained to be “natively multimodal” – in other words, able to work with and use greater than just words. They were pre-trained and tuned based on various audio files, images and videos, a big set of codebases and text in various languages.

This distinguishes Gemini from models akin to Google’s LaMDA, which was trained solely on text data. LaMDA cannot understand or generate anything beyond text (e.g. essays, email drafts), but this isn’t the case with Gemini models.

What is the difference between Gemini Apps and Gemini Models?

Image credits: Google

Google, proving once more that it has no talent for branding, didn’t make it clear from the starting that Gemini was separate and distinct from the Gemini web and mobile app (formerly Bard). Gemini Apps is solely an interface through which you can access certain Gemini models – consider it like Google’s GenAI client.

Incidentally, Gemini applications and models are also completely independent of Imagen 2, Google’s text-to-image model available in a few of the company’s development tools and environments.

What can Gemini do?

Because Gemini models are multimodal, they will theoretically perform a spread of multimodal tasks, from transcribing speech to adding captions to images and videos to creating graphics. Some of those features have already reached the product stage (more on that later), and Google guarantees that each one of them – and more – can be available in the near future.

Of course, it is a bit difficult to take the company’s word for it.

Google seriously fell in need of expectations when it got here to the original Bard launch. Recently, it caused a stir by publishing a video purporting to show the capabilities of Gemini, which turned out to be highly fabricated and kind of aspirational.

Still, assuming Google is kind of honest in its claims, here’s what the various tiers of Gemini will give you the option to do once they reach their full potential:

Gemini Ultra

Google claims that Gemini Ultra – thanks to its multimodality – may help with physics homework, solve step-by-step problems in a worksheet and indicate possible errors in already accomplished answers.

Gemini Ultra can be used for tasks akin to identifying scientific articles relevant to a selected problem, Google says, extracting information from those articles and “updating” a graph from one by generating the formulas needed to recreate the graph with newer data.

Gemini Ultra technically supports image generation as mentioned earlier. However, this feature has not yet been implemented in the finished model – perhaps because the mechanism is more complex than the way applications akin to ChatGPT generate images. Instead of passing hints to a picture generator (akin to DALL-E 3 for ChatGPT), Gemini generates images “natively” with no intermediate step.

Gemini Ultra is accessible as an API through Vertex AI, Google’s fully managed platform for AI developers, and AI Studio, Google’s online tool for application and platform developers. It also supports Gemini apps – but not totally free. Access to Gemini Ultra through what Google calls Gemini Advanced requires a subscription to the Google One AI premium plan, which is priced at $20 monthly.

The AI Premium plan also connects Gemini to your broader Google Workspace account—think emails in Gmail, documents in Docs, presentations in Sheets, and Google Meet recordings. This is useful, for instance, when Gemini is summarizing emails or taking notes during a video call.

Gemini Pro

Google claims that Gemini Pro is an improvement over LaMDA by way of inference, planning and understanding capabilities.

Independent test by Carnegie Mellon and BerriAI researchers found that the initial version of Gemini Pro was actually higher than OpenAI’s GPT-3.5 at handling longer and more complex reasoning chains. However, the study also found that, like all major language models, this version of Gemini Pro particularly struggled with math problems involving several digits, and users found examples of faulty reasoning and obvious errors.

However, Google promised countermeasures – and the first one got here in the type of Gemini 1.5 Pro.

Designed as a drop-in substitute, Gemini 1.5 Pro has been improved in lots of areas compared to its predecessor, perhaps most notably in the amount of information it could actually process. Gemini 1.5 Pro can write ~700,000 words or ~30,000 lines of code – 35 times greater than Gemini 1.0 Pro. Moreover – the model is multimodal – it isn’t limited to text. Gemini 1.5 Pro can analyze up to 11 hours of audio or an hour of video in various languages, albeit at a slow pace (e.g., looking for a scene in an hour-long movie takes 30 seconds to a minute).

Gemini 1.5 Pro entered public preview on Vertex AI in April.

An additional endpoint, Gemini Pro Vision, can process text images – including photos and videos – and display text according to the GPT-4 model with Vision OpenAI.

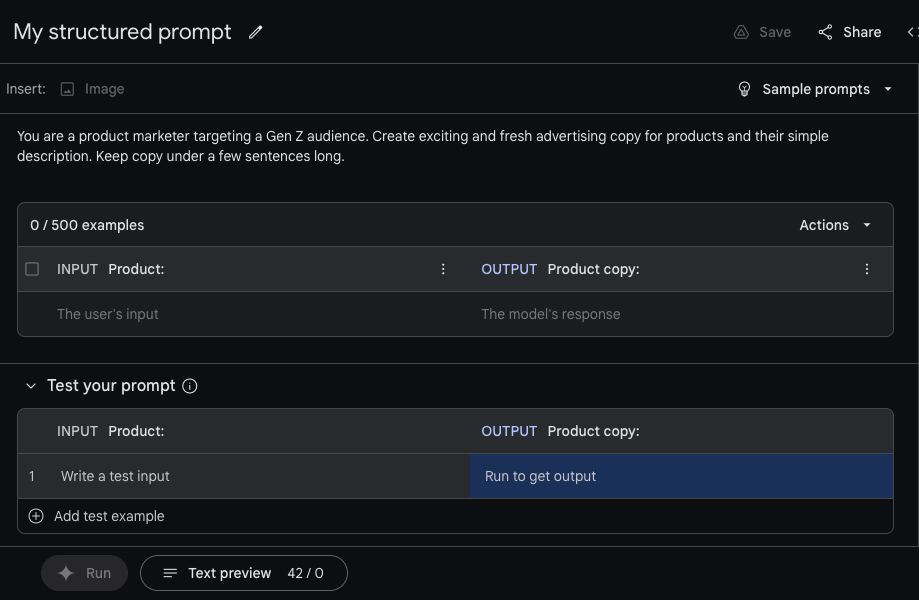

Using Gemini Pro with Vertex AI. Image credits: Twins

Within Vertex AI, developers can tailor Gemini Pro to specific contexts and use cases through a tuning or “grounding” process. Gemini Pro can be connected to external third-party APIs to perform specific actions.

AI Studio includes workflows for creating structured chat prompts using Gemini Pro. Developers have access to each Gemini Pro and Gemini Pro Vision endpoints and might adjust model temperature to control creative scope and supply examples with tone and elegance instructions, in addition to fine-tune security settings.

Gemini Nano

The Gemini Nano is a much smaller version of the Gemini Pro and Ultra models, and is powerful enough to run directly on (some) phones, slightly than sending the job to a server somewhere. So far, it supports several features on the Pixel 8 Pro, Pixel 8, and Samsung Galaxy S24, including Summarize in Recorder and Smart Reply in Gboard.

The Recorder app, which allows users to record and transcribe audio with the touch of a button, provides a Gemini-powered summary of recorded conversations, interviews, presentations and more. Users receive these summaries even in the event that they do not have a signal or Wi-Fi connection available – and in a nod to privacy, no data leaves their phone.

Gemini Nano can be available on Gboard, Google’s keyboard app. There, it supports a feature called Smart Reply that helps you suggest the next thing you’ll want to say while chatting in the messaging app. The feature initially only works with WhatsApp, but can be available in additional apps over time, Google says.

In the Google News app on supported devices, the Nano enables Magic Compose, which allows you to compose messages in styles akin to “excited”, “formal”, and “lyrical”.

Is Gemini higher than OpenAI’s GPT-4?

Google has had this occur a number of times advertised Gemini’s benchmarking superiority, claiming that Gemini Ultra outperforms current state-of-the-art results on “30 of 32 commonly used academic benchmarks used in the research and development of large language models.” Meanwhile, the company claims that Gemini 1.5 Pro is best able to perform tasks akin to summarizing content, brainstorming, and writing higher than Gemini Ultra in some situations; it will probably change with the premiere of the next Ultra model.

However, leaving aside the query of whether the benchmarks actually indicate a greater model, the results that Google indicates appear to be only barely higher than the corresponding OpenAI models. And – as mentioned earlier – some initial impressions weren’t great, each amongst users and others scientists mentioning that the older version of Gemini Pro tends to misinterpret basic facts, has translation issues, and provides poor coding suggestions.

How much does Gemini cost?

Gemini 1.5 Pro is free to use in Gemini apps and, for now, in AI Studio and Vertex AI.

However, when Gemini 1.5 Pro leaves the preview in Vertex, the model will cost $0.0025 per character, while the output will cost $0.00005 per character. Vertex customers pay per 1,000 characters (roughly 140 to 250 words) and, for models like the Gemini Pro Vision, per image ($0.0025).

Let’s assume a 500-word article incorporates 2,000 characters. To summarize this text with the Gemini 1.5 Pro will cost $5. Meanwhile, generating an article of comparable length will cost $0.1.

Pricing for the Ultra has not yet been announced.

Where can you try Gemini?

Gemini Pro

The easiest place to use Gemini Pro is in the Gemini apps. Pro and Ultra respond to queries in multiple languages.

Gemini Pro and Ultra are also available in preview on Vertex AI via API. The API is currently free to use “within limits” and supports certain regions including Europe, in addition to features akin to chat and filtering.

Elsewhere, Gemini Pro and Ultra might be present in AI Studio. Using this service, developers can iterate on Gemini-based prompts and chatbots after which obtain API keys to use them of their applications or export the code to a more complete IDE.

Code Assistant (formerly AI duo for programmers), Google’s suite of AI-based code completion and generation tools uses Gemini models. Developers could make “large-scale” changes to code bases, akin to updating file dependencies and reviewing large snippets of code.

Google has introduced Gemini models in its development tools for the Chrome and Firebase mobile development platform and database creation and management tools. It has also introduced new security products based on Gemini technology, e.g Gemini in Threat Intelligence, a component of Google’s Mandiant cybersecurity platform that may analyze large chunks of probably malicious code and enable users to search in natural language for persistent threats or indicators of compromise.

Technology

Kai Cenat teases his University of Streamer, but some influentially warn of the defect in creating content

Twitch Megastar Kai Cenat confirmed that his once historical “Streamer University” is officially starting, a number of months after the first raising of the concept during the live broadcast of 2025. While the idea already generates noise amongst aspiring creators who’re comfortable to equalize their content of content, some are concerned about the fee for the full -time lifestyle.

According to the price trailer announcing his Streamer University contained a sentence At Hogwarts, a university, which is the scenery of a preferred film and film franchise. In the film, Cenat writes letters to potential streamers, informing them about their selection to the university.

Welcome To Streamer University

Enroll Now! pic.twitter.com/6vU1nBsW9E— AMP KAI (@KaiCenat) May 6, 2025

“I am excited that I can introduce you to the most sincere welcome at the first class of Streamer University,” said Cenat. “Here you will find a school where chaos is encouraged and the content is a king … I can’t wait to see you all in the campus for the first semester.”

The original Cenat idea consisted of renting a brick university and mortar to rearrange his classes, but details about these specific logistics, the same to location, dates or exchange materials have not yet been announced; But earlier, he raised the concept that other content creators, the same to Mrbeast or Mark Rober, helping to point free university classes.

The Cenata website, which he created for potential content creators, says that “streamers of all environments” can learn “both unrealized, upcoming and recognized creators.”

However, Mrbeast, which didn’t confirm his commitment, recently warned about the drawback of creating content during the February interview about Steven Bartlett’s podcast.

“If my mental health were a priority, I would not be as successful as I did,” said Bartlett during the discussion.

According to Shira Lazar, co -founder of Creatorcare, a newly launched Soffee service, which goals to help the creators of content in matters of mental health specific to their occupation, often content creators Fight fear, Depression and disordered food, in addition to income fluctuations.

“() Fear of the disappearance of burning fuels in a system, which constantly requires feeding channels. I am like Joan Rivers; I will create until I die, so I want to make sure that I can develop,” said Lazar in an interview.

Amy Kelly, a co -founder of audit health therapy and a licensed family therapist, whose clients consist of many content creators, said The Outlet said that the influencers industry itself just just won’t be built to take care of the creators who feed her.

“Social media is not only a platform – it’s a recruiter,” she said, as she noticed, that 57% of teenagers gene with in the USA He said they’d turn into influential If he receives a likelihood. “We cultivate teenagers in a digital working force with proven threats to mental health – a modern equivalent of sending children to a coal mine without protective equipment.”

As Lazar said in an interview: “The creator’s economy exploded, but the support systems did not meet. Because more gene from this space is professionally entering, we must treat it like a real workplace. This means sustainable systems not only for monetization, but also for mental health.”

)

Technology

10 gadgets that a mother will love about technology

Mama list can handle it.

Mother’s Day is a great time to rejoice mothers and amazing women who raised us. However, day-to-day is a great day to honor them, because mothers are really a gift that he gives.

If your mother is a technical type that on a regular basis seems to know about essentially essentially essentially essentially essentially essentially essentially essentially probably probably essentially essentially probably essentially essentially probably essentially probably probably probably probably probably probably essentially probably essentially probably essentially probably essentially essentially probably probably probably probably probably probably essentially essentially essentially probably essentially essentially essentially probably probably probably probably essentially probably probably essentially essentially probably essentially probably probably probably essentially probably probably essentially essentially probably essentially essentially essentially probably probably probably essentially probably probably essentially essentially probably probably probably probably essentially essentially essentially probably probably probably probably essentially essentially essentially essentially essentially essentially probably probably probably probably probably essentially essentially essentially probably probably probably probably probably probably essentially probably essentially probably essentially essentially essentially essentially probably probably probably essentially probably probably probably essentially essentially probably essentially essentially essentially probably probably probably essentially probably essentially essentially essentially probably probably essentially essentially essentially probably probably essentially probably essentially essentially probably essentially essentially essentially essentially probably essentially essentially probably essentially probably essentially essentially probably probably essentially probably probably probably probably essentially essentially probably probably probably probably essentially essentially probably essentially essentially essentially essentially probably essentially essentially probably essentially probably probably essentially probably probably probably essentially essentially probably essentially probably probably probably essentially probably probably probably essentially probably essentially essentially probably essentially essentially probably essentially essentially essentially probably probably probably essentially probably probably probably probably essentially probably probably probably probably essentially essentially probably essentially essentially essentially essentially probably the most recent gadget and downloads latest applications or updates her home to an intelligent home that practically is run, here is a list of gadgets that you will offer you the likelihood to develop. From those fanciful Wellness to kitchen gadgets, which will move her cooking game to a higher level, these types will bring you the crown of a “favorite child” on Mother’s Day or day-to-day.

Ember cup 2

Watch on TikTok

Smart MUG 2 Ember Tempeime Control is number of wonderful – this keeps drinks at a great temperature For about an hour and a half. While the cup is a bit thrown out for USD 149.95, it’s truthfully price it for mothers who’re still in motion. If you are trying to pay money for a gift that you truly use, you will offer you the likelihood to download one among the company’s sites. It is a game that changes for tea or coffee lovers.

Roomba + 405 Combo Robot

Robot Combo Roomba Plus 405 with Dock AutoWash costs $ 599. This genius gadget not only vacuum and mops, but actually creates a map of his home and empties/cleans after ending.

Aura Carver Digital Photo Frame

The digital photo frame Aura Carver lasts for 149 USD and, as a thoughtful gift for mom, allows her to see useful family moments in a sublime, stylish frame without a mess of traditional albums or web platforms. What makes it unique is a way that everyone can remotely add photos through the applying, so the mother’s collection stays updated about latest memories. He can control how briskly slide plays or simply move.

TheRagun Mini

The latest TheRagun Mini (third generation) costs 179 USD and is such a thoughtful gift for mom. It provides serious muscle relief that he can throw straight into the purse. This is definitely a pocket tool He deals with stubborn painIt melts tension and helps her decompressed after a long day. This little gem has it back.

Tile Pro

https://www.tiktok.com/@_mettaz_/video/7433622090697231662 ?_R=1&_T=ZT-8WGNGAV95TTT

Tile Tile Pro Bluetooth costs 99 USD, and likewise you will offer you the likelihood to catch one on the Life360 website. Why is it such a perfect gift for mom? Well, that is a lifeguard when he’s Searching for her keys or lost her purse or cannot find these other needed items. Mom can simply use her phone to trace them in a few seconds.

Smart Garden 9 Pro

https://www.tiktok.com/@jesisdabest08/video/69537423724087045 ?_R=1&_T=ZT-8WGNOX1XZAQ

Smart Garden 9 Pro costs $ 299.95 and is sweet for mothers who’re excited about cooking with fresh herbs or who Enjoy gardening But I hate seasonal restrictions. This gadget does almost everything-in height, it robotically provides amongst the varied finest possible quantity of sunshine with these prolons and provides adequate nutrients to the roots. Lighting functions controlled by the applying allow it to manage the schedule with none confusion. She will be excited when she will offer you the likelihood to grow herbs all yr round, even contained contained throughout the midst of winter! Never more sad, withered herbs of a grocery store or a broken heart of gardening when frost strikes.

Apple Watch Series 10

https://www.tiktok.com/@jarendivinity/video/745877349853936430 ?_R=1&_T=ZT-8WGNWGOQJBO

I just noticed Apple Watch Series 10 for $ 399. This is sweet for Have bookmarks on health informationMessage handling without searching the phone and exposing it to remain energetic. It works well for busy mothers who care about their well -being, but they don’t on a regular basis find time.

Apple Airpods 4

Airpods 4 could possibly be amongst the varied finest possible gift for Mother’s Day. Mom would love their amazing sound quality and the cancellation of the noise that allows her Use chaos when he has to. They work like magic also with all its Apple devices in retail for $ 179. Regardless of whether he gets into your favorite melodies, receives connections or wander off in podcasts, these small ear inserts are delivered. Oh, and likewise you will offer you the likelihood so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as in order so as to add a personal accent, engraving it together together along together along along together together along along along together along along along together along together together together along along along along together along together along along along along together together together along along together together together together together together together along along along together along along together together together together together together along along together along along together together along together together together along along together together together together along along together together together along along together together along together along along together together together along along along together along along along along along together along together along along together along together along along along along together along together together along along along together together along along along together along together together along along along together together along along together together together together along along together together along along together together along together together together along along along along along together along together together together together along along together along along together together along along along together together along together together along together together together along together along along along along together along together together together together along together together together together together together along along along along along together together along together together along along together along along along along along together along along along together along together together along along along together together along along together together together together together along with her name or sweet message.

Philips Hue Smart Light Starter Set

https://www.tiktok.com/@rustandtrust/video/7479953867074047278 ?_R=1&_T=ZT-8WGO3NCA0P0

Check the Philips Hue Smart Light starter kit for mom, starting from 199 USD. It is sweet for mothers who deserve a little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little little bit of magic of their day-to-day lives. She would love the potential of adjusting the mood by moving light colors Transform the atmosphere of each room. The voice control function allows mom to manage the lighting without getting up from a comfortable place. In addition, there’s a Bluetooth control directly from mobile devices. Trust me, nothing says “I appreciate you”, very very comparable to the gift of atmosphere and convenience, in a single.

Levoit Core 600s Smart Air Ofurifier

Smart Air Purifier Levoit Core 600s currently costs USD 299 at levoit.com. Perfect for a mother who deserves easy respiratory. It changes games for mothers coping with allergies Or who’s special to handle up your personal home in health. It is admittedly cool that it could thoroughly be controlled using application or voice commands.

Technology

23andme customers informed about bankruptcy and potential claims – the deadline is July 14

23andme, an infinite of genetic tests, which has been priced on billions, is now moving in bankruptcy in chapter 11 and will notify an incredible deal of and an incredible deal of of current and former clients that they is perhaps entitled to make claims as a component of the restructuring process. The company and 11 of its subsidiaries, including Lemonaid Health and LPRXONE, submitted an application for bankruptcy protection on March 23 this yr in the eastern Missouri district. Customers were notified on Sunday to July 14 of July 14 about claims for losses.

Bankruptcy occurs after a storm of 18 months for 23ndme, marked with a decrease in sales, managerial departures and destructive violation of information, which violated confidential personal data of virtually 7 million users. Violation, publicly disclosed October 2023According to TechCrunch, names, birth years, relationship labels, DNA percentage, participation with relatives, ancestors’ reports and locations. Fallout caused many collective processes and a wave of distrust of customers, which seriously cuts the company coping with the company’s consumers.

Now customers that affected this violation – particularly imposed by 23andme that their information has been violated between May and October 2023 – they’ll submit so -called Civil security incident claim. Those who’ve suffered financial damage or others on account of the violation may make a claim inside the bankruptcy case. Customers with other varieties of complaints not related to cyber attack, paying homage to problems with the results of a DNA test or a teeth service in the company, can submit a separate claim in accordance with General bar date package.

Congress also expressed concerns about the consequences of bankruptcy privacy.

The fall of 23andme was fast by grace, and his misfortunes were intensified by her ambitious but expensive extension in digital health and telemedicine, including $ 400 million The takeover of Lemonaid Health in 2021 was originally aimed toward diversifying 23andme offers, apart from testing consumer DNA, movements tensed 23andme financial resources and didn’t provide the growth needed by the company.

Proposed settlement in the amount of $ 30 million in a related collective lawsuit over a cyber attack stays Due to bankruptcy proceedings. (23andme lawyers claim that the settlement is now disputed when the company is in bankrupt.) Customers who must handle up the right to compensation must provide formal evidence of the claim regardless of their participation in a collective motion.

TechCrunch contacted 23andme to comment.

TechCrunch event

Berkeley, California

|.

June 5

Book now