Technology

Lightmatter’s $400 million round targets AI hyperscalers for photonic data centers

Photonic computing startup Lightmatter has raised $400 million to blow open one of the bottlenecks of modern data centers. The company’s optical interconnect layer lets hundreds of GPUs operate synchronously, streamlining the expensive and complicated work of training and running artificial intelligence models.

The rise of artificial intelligence and its correspondingly massive computing demands have supercharged the data center industry, but it’s not as simple as plugging in another thousand GPUs. As high-performance computing experts have known for years, it doesn’t matter how fast each node of a supercomputer is if those nodes sit idle half the time waiting for data to arrive.
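The idle-node point can be illustrated with a toy utilization model. The sketch below assumes each step is a compute phase followed by a wait for data that does not overlap with it; the function names and sample timings are hypothetical and are not figures from the article.

```python
def step_time(compute_s: float, wait_s: float) -> float:
    """Wall-clock time of one step: compute, then a non-overlapped wait for data."""
    if compute_s < 0 or wait_s < 0:
        raise ValueError("times must be non-negative")
    return compute_s + wait_s


def utilization(compute_s: float, wait_s: float) -> float:
    """Fraction of wall-clock time the node spends computing."""
    total = step_time(compute_s, wait_s)
    if total == 0:
        raise ValueError("step must take positive time")
    return compute_s / total


# Hypothetical baseline: the node is idle half the time.
baseline = utilization(compute_s=1.0, wait_s=1.0)

# Doubling node speed halves compute time but leaves the wait untouched,
# so the step only gets 2.0 / 1.5 = 1.33x faster, not 2x.
speedup_faster_node = step_time(1.0, 1.0) / step_time(0.5, 1.0)

# Halving the wait instead (a faster interconnect) gives the same 1.33x
# and raises utilization, without touching the node at all.
speedup_faster_link = step_time(1.0, 1.0) / step_time(1.0, 0.5)

print(f"baseline utilization: {baseline:.2f}")
print(f"speedup from 2x faster node: {speedup_faster_node:.2f}x")
print(f"speedup from 2x faster link: {speedup_faster_link:.2f}x")
```

Under these assumptions, a node that waits as long as it computes gains as much from a faster interconnect as from a faster processor.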

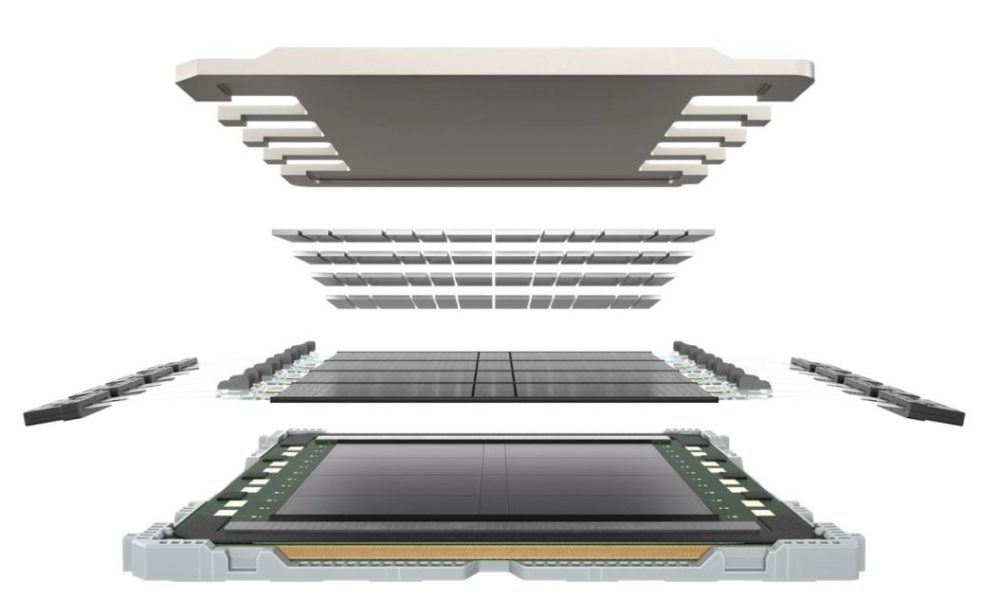

The interconnect layer or layers are what actually turn racks of CPUs and GPUs into one giant machine, so it follows that the faster the interconnect, the faster the data center. Lightmatter looks set to build the fastest interconnect layer, using the photonic chips it has been developing since 2018.

“Hyperscale people know that if they want a million-node computer, they can’t do it with Cisco switches. Once it leaves the rack, we go from a high-density interconnect to basically a solid chunk,” Nick Harris, the company’s CEO and founder, told TechCrunch. (You can see a short talk he gave summarizing this issue here.)

He said the state-of-the-art solution is NVLink, specifically the NVL72 platform, which consists of 72 Nvidia Blackwell units interconnected in a rack, allowing for a maximum of 1.4 exaFLOPs at FP4 precision. But no rack is an island, and all of that processing power has to be squeezed through 7 terabytes of scale-up networking. That sounds like a lot, and it is, but the inability to connect these units to one another and to other racks more quickly is one of the main obstacles to improving efficiency.

“For a million GPUs, you need multiple layers of switches, and that creates a huge delay burden,” Harris said. “You have to go from electrical to optical, electrical to optical… the amount of energy used and the wait time are huge. In larger clusters the situation becomes dramatically worse.”
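To get a feel for why a million GPUs implies several switch layers, here is a minimal sketch. It assumes a fat-tree (folded Clos) network built from identical switches, for which the textbook capacity with radix k and n tiers is 2·(k/2)^n endpoints. The topology, the radix of 64 and the function names are illustrative assumptions; the article does not describe the networks Harris has in mind.

```python
def fat_tree_capacity(radix: int, tiers: int) -> int:
    """Maximum endpoints of a fat tree built from `radix`-port switches."""
    if radix < 4 or radix % 2 != 0:
        raise ValueError("radix must be an even number >= 4")
    if tiers < 1:
        raise ValueError("tiers must be >= 1")
    return 2 * (radix // 2) ** tiers


def tiers_needed(endpoints: int, radix: int) -> int:
    """Smallest number of switch tiers that can connect `endpoints` devices."""
    tiers = 1
    while fat_tree_capacity(radix, tiers) < endpoints:
        tiers += 1
    return tiers


def worst_case_switch_hops(tiers: int) -> int:
    """Switches crossed on the longest path: up to the top tier and back down."""
    return 2 * tiers - 1


RADIX = 64  # assumed switch port count, not a figure from the article

for gpus in (72, 1_024, 1_000_000):
    n = tiers_needed(gpus, RADIX)
    print(f"{gpus:>9,} GPUs -> {n} tier(s), "
          f"up to {worst_case_switch_hops(n)} switch hops")
```

Each extra tier adds two switches to the longest path, and in a conventional design each hop between racks can mean another electrical-to-optical conversion, which is the delay and energy cost Harris describes.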

So what does Lightmatter bring to the table? Fiber. Lots of fibers, routed through a purely optical interface. With up to 1.6 terabits per fiber (using multiple colors) and as many as 256 fibers per chip… well, let’s just say 72 GPUs at 7 terabytes starts to sound positively quaint.
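The comparison can be worked through as back-of-the-envelope arithmetic. The sketch below takes the article's figures at face value and assumes they are peak per-second rates that add linearly across fibers; it is a theoretical ceiling under those assumptions, not a measured result, and the constant names are illustrative.

```python
BITS_PER_BYTE = 8

# Figures quoted in the article (treated here as peak rates per second).
TBIT_PER_FIBER = 1.6          # "up to 1.6 terabits per fiber"
FIBERS_PER_CHIP = 256         # "as many as 256 fibers per chip"
NVL72_SCALE_UP_TBYTE = 7.0    # "7 terabytes" for a 72-GPU rack


def aggregate_tbit(per_fiber_tbit: float, fibers: int) -> float:
    """Peak aggregate bandwidth in terabits, assuming fibers add linearly."""
    return per_fiber_tbit * fibers


def tbit_to_tbyte(tbit: float) -> float:
    """Convert terabits to terabytes."""
    return tbit / BITS_PER_BYTE


chip_tbit = aggregate_tbit(TBIT_PER_FIBER, FIBERS_PER_CHIP)
chip_tbyte = tbit_to_tbyte(chip_tbit)
ratio = chip_tbyte / NVL72_SCALE_UP_TBYTE

print(f"per chip: {chip_tbit:.1f} Tb, or {chip_tbyte:.1f} TB")
print(f"versus the NVL72 rack figure: about {ratio:.1f}x")
```

On these assumptions a single fully populated chip would top out at roughly 410 terabits, or about 51 terabytes, several times the figure quoted for an entire NVL72 rack.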

“Photonics is coming much faster than people thought — people have been struggling for years to get it off the ground, but we made it happen,” Harris said. “After seven years of absolutely murderous hard work,” he added.

The photonic interconnect currently available from Lightmatter provides 30 terabytes of bandwidth, while the on-rack optical cabling lets 1,024 GPUs operate synchronously in their own specially designed racks. In case you are wondering, the two numbers don’t increase by similar factors because much of what would otherwise need to be networked to another rack can be done on-rack in a thousand-GPU cluster. (And anyway, 100 terabits is on the way.)

Harris noted that the market for this is enormous, with every major data center company, from Microsoft to Amazon to newer entrants like xAI and OpenAI, showing an endless appetite for compute. “They connect buildings together! I wonder how long they can keep it up,” he said.

Many of those hyperscalers are already customers, though Harris would not name any of them. “Think of Lightmatter a bit like a foundry, like TSMC,” he said. “We don’t pick favorites or associate our name with other people’s brands. We provide them with a roadmap and a platform – we just help them grow the dough.”

However, he added coyly, “you can’t quadruple the valuation without using this technology,” perhaps an allusion to OpenAI’s recent funding round that valued the company at $157 billion, but the remark could just as easily apply to his own company.

This $400 million Series D round values the company at $4.4 billion, a similar multiple of its mid-2023 valuation that “makes us by far the largest photonics company. That’s great!” Harris said. The round was led by T. Rowe Price Associates, with participation from existing investors Fidelity Management and Research Company and GV.

What’s next? In addition to interconnects, the company is developing new substrates for chips so that they can perform even more intimate, if you will, networking tasks using light.

Harris speculated that beyond the interconnect, the main differentiator in the future will be power per chip. “In ten years, everyone will have wafer-sized chips – there is simply no other way to improve the performance of each chip,” he said. Cerebras is, of course, already working on this, although it remains an open question whether the true value of that advance can be captured at this stage of the technology.

But Harris, seeing the chip industry coming up against a wall, plans to be ready and waiting with the next step. “Ten years from now, we’ll put Moore’s Law together,” he said.