
Cops start using AI chatbots to write crime reports, despite concerns about racial bias in AI technology

OKLAHOMA CITY (AP) — A body camera captured every word and bark uttered by police Sergeant Matt Gilmore and his K-9 dog, Gunner, as they searched for a group of suspects for nearly an hour.

Normally, the Oklahoma City police sergeant would grab his laptop and spend another 30 to 45 minutes writing up a report about the search. But this time he had artificial intelligence write the first draft.

Drawing on all the sounds and radio communications picked up by the microphone attached to Gilmore's body camera, the AI-powered tool produced a report in eight seconds.

“It was a better report than I could have written, and it was 100 percent accurate. It flowed smoothly,” Gilmore said. It even documented something he didn't remember hearing: another officer mentioning the color of the car the suspects ran from.

The Oklahoma City Police Department is one of a handful of departments experimenting with AI chatbots to produce first drafts of incident reports. Officers who have tried the technology rave about the time it saves, while some prosecutors, police watchdogs and lawyers are concerned about how it could alter a fundamental document in the criminal justice system, one that plays a role in who gets prosecuted or jailed.

Built on the same technology as ChatGPT and sold by Axon, best known for developing the Taser and as the dominant U.S. supplier of body-worn cameras, the tool could prove to be what Gilmore describes as the next “game changer” for police work.

“They become police officers because they want to do police work, and spending half their day doing data entry is just a tedious part of the job that they hate,” said Axon founder and CEO Rick Smith, describing the new AI product, called Draft One, as having the “most positive reaction” of any product the company has introduced.

“There are certainly concerns,” Smith added. In particular, he said, district attorneys prosecuting criminal cases want to be sure that officers, and not solely an AI chatbot, are responsible for authoring their reports, because they may have to testify in court about what they witnessed.

“They never want a police officer to stand up and say, ‘AI wrote that, I didn’t write that,’” Smith said.

AI technology is not new to police agencies, which have adopted algorithmic tools to read license plates, recognize suspects' faces, detect the sounds of gunfire and predict where crimes might occur. Many of those applications have raised privacy and civil rights concerns and prompted attempts by lawmakers to set safeguards. But AI-generated police reports are so new that there are few, if any, guardrails guiding their use.

Concerns that society's racial biases and prejudices could be built into AI technology are just some of what Oklahoma City community activist Aurelius Francisco finds “deeply troubling” about the new tool, which he learned about from the Associated Press.

“The fact that this technology is being used by the same company that supplies the department with Tasers is alarming enough,” said Francisco, co-founder of the Oklahoma City-based Foundation for the Liberation of Minds.

He said automating these reports “will make it easier for police to harass, surveil and inflict violence on members of the community. While that makes the job of a police officer easier, it makes the lives of black and brown people harder.”

Before trying out the tool in Oklahoma City, police officials showed it to local prosecutors, who advised some caution before using it on high-stakes criminal cases. For now, it is used only for minor incident reports that don't lead to someone getting arrested.

“So no arrests, no crimes, no violent crimes,” said Oklahoma City Police Capt. Jason Bussert, who oversees information technology for the 1,170-officer department.

That's not the case in another city, Lafayette, Indiana, where Police Chief Scott Galloway told the AP that all of his officers can use Draft One on any kind of case and that it has been “incredibly popular” since the pilot began earlier this year.


Or in Fort Collins, Colorado, where police Sergeant Robert Younger said officers are free to use it on any type of report, though they discovered it didn't work well on patrols of the city's downtown bar district because of an “overwhelming amount of noise.”

In addition to using AI to analyze and summarize the audio recording, Axon experimented with computer vision to summarize what was “seen” in the video recording before quickly realizing the technology wasn’t ready yet.

“Given all the sensitivities around policing, around race and other identities of people involved, that's an area where I think we're going to have to do some real work before we would introduce it,” said Smith, Axon's CEO, describing some of the responses tested as not “overtly racist” but insensitive in other ways.

Those experiments led Axon to focus squarely on audio in the product it unveiled in April at the company's annual conference for police officials.

The technology relies on the same generative AI model that powers ChatGPT, made by San Francisco-based OpenAI. OpenAI is a close business partner of Microsoft, which is Axon's cloud computing provider.

“We use the same underlying technology as ChatGPT, but we have access to more knobs and controls than an actual ChatGPT user would have,” said Noah Spitzer-Williams, who leads Axon’s AI products. Turning off the “creativity knob” helps the model stick to the facts, so it “doesn’t embellish or hallucinate in the same way that you might find if you were just using ChatGPT,” he said.

Axon declined to say how many police departments are using the technology. It's not the only vendor, with startups like Policereports.ai and Truleo pitching similar products. But given Axon's deep relationship with police departments that buy its Tasers and body cameras, experts and police officials expect AI-generated reports to become more ubiquitous in the coming months and years.

Before that happens, legal scholar Andrew Ferguson would like to see more public discussion about the benefits and potential harms. For one thing, the large language models behind AI chatbots are prone to making up false information, a problem known as hallucination that could add convincing and hard-to-notice falsehoods to a police report.

“I am concerned that automation and the ease of the technology would cause police officers to be sort of less careful with their writing,” said Ferguson, a law professor at American University who is working on what is expected to be the first law review article on the emerging technology.

Ferguson said a police report is important in determining whether an officer's suspicion “justifies someone's loss of liberty.” It's sometimes the only testimony a judge sees, especially for misdemeanor crimes.

Human-generated police reports also have flaws, Ferguson said, but it's an open question as to which is more reliable.

For some officers who have tried it, it is already changing how they respond to a reported crime. They narrate what's happening so the camera better captures what they'd want to put in writing.

As the technology catches on, Bussert expects officers will become “more and more verbal” in describing what's in front of them.

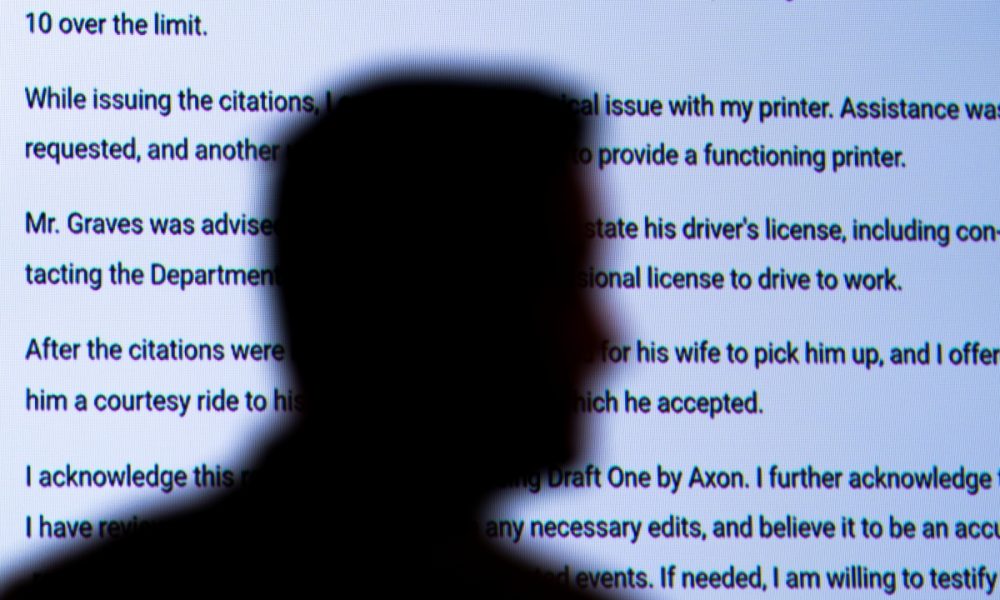

After Bussert loaded the video of a traffic stop into the system and pressed a button, the program produced a paragraph-style narrative report in conversational language that included dates and times, just as an officer would have typed from his notes, all based on audio from the body camera.

“It was literally a few seconds,” Gilmore said, “and it got to the point where I thought, ‘I don’t have anything to change anymore.’”

At the end of the report, the officer must click a box indicating that it was generated with the use of AI.